Heads up: this is not a technical run through, but more of a conceptual overview. Apologies if you came here looking for a how-to. Hopefully we will have just that in the next few months.

But enough about the past, let’s talk about the future!

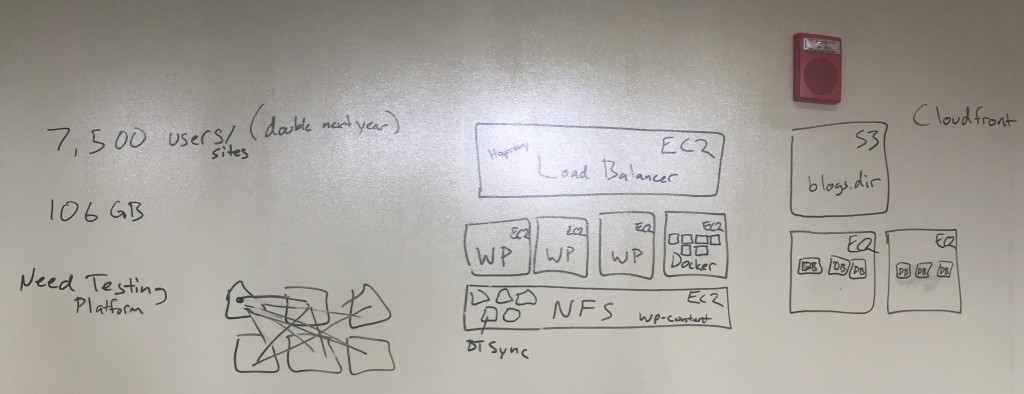

This past week Tim Owens and I went down to VCU’s ALT Lab to meet with Tom Woodward, Jon Becker, and Mark Luetke about the work they’re doing with Ram Pages. I already blogged about a couple of plugins they created for making syndication-based course sites dead simple. We also got to talking about some of the ways we have been using Amazon Web Services (AWS)to scale UMW Blogs. At this point Tim took us to school on the whiteboard explaining a possible setup he has been imagining, which is still fairly experimental.

Don’t let Tim fool you, he is DevOps #4life now. He can be found in his spare time watching presentations about load balancing a site for a billion users or scaling infrastructure for small services like Netflix. I’m becoming more and more interested in infrastructure discussions because they highlight interesting trends in the shifting nature of tech that deeply effects edtech, such as virtualization, containers, and APIs.

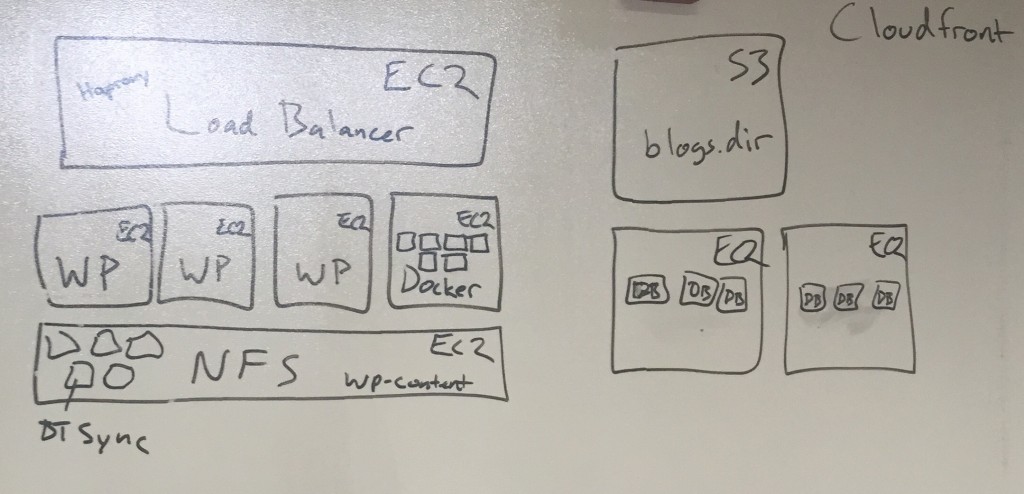

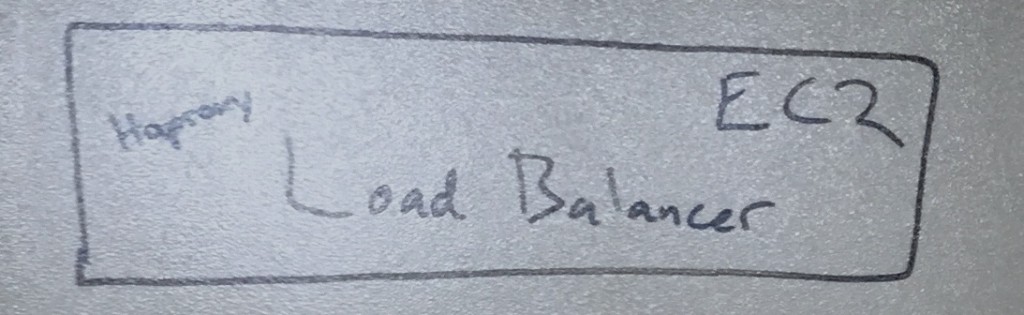

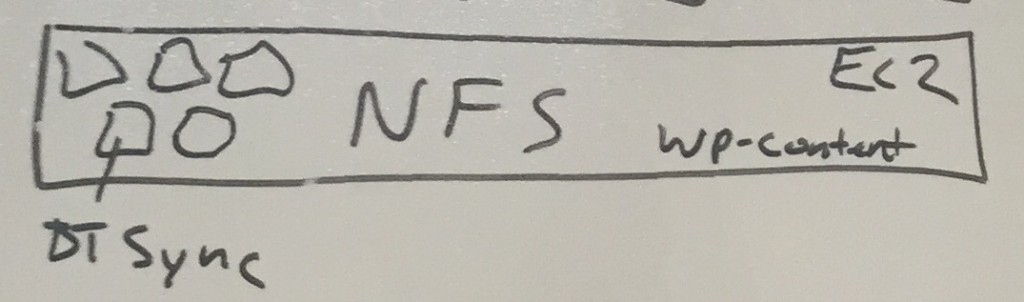

Anyway, the image above is a look at a potential setup for a large WordPress Multisite instance on AWS. It has a couple of elements worth discussing in some detail because I want to try and get my head around each of them. The first is a load balancer that runs in its own EC2 instance.

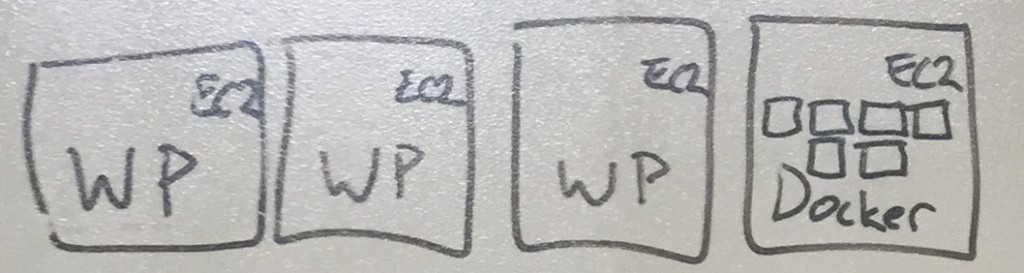

What the load balancer does is direct traffic to the Ec2 instance running the WordPress core files with the least load. So if you have four EC2 instances each running WordPress’s core files, the one with the least usage get the next request. Additionally, if all the instances have too great a load another could, theoretically, be spun up to meet the demand. That’s one of the core ideas behind elastic computing. The load balancer Tim used for UMW Blogs was HAProxy.

As mentioned above, you can setup a series of instances of EC2 on AWS with the core WordPress files save the wp-content directory, which is the only directory folks write to. But you will notice in the fourth instances Tim switched things up. He suggested here that we could have an EC2 instance running Docker that could then run several WordPress instances within it. What’s the difference? And this is where I am still struggling a bit, but from what I understand this allows you to spin up new instances quicker, isolate instances from each other for more security, and upgrade and switch out instances seamlessly. It effectively makes WordPress upgrades in a large environment trivial.

We have yet another EC2 instance that is the Network File Storage, this holds the wp-content files. The uploads, plugins, themes, upgrades, etc. And each of the above instances share this instance. The all write to this, but one of the issues here is that this can be a single point of failure, kinda like the load balancer. So, Tim suggested there is BitTorrent Sync (BTSync), which I still don’t totally understand but sounds awesome. It’s basically technology that synchs files from your computer to spot on the internet, or between spaces in the internet, etc. So, what if we had several bucket where the various instances of WordPress core files were writing the upload files, themes, plugins, etc, and those buckets used BTSync to share between them almost immediately. So then you wouldn’t have a single point of failure, you would have the various instances writing to various buckets of files that would be constantly synching using the technology behind BitTorrent. Far out, right?

BTSync provide ability to immediately copy and synch files across several buckets of the same files that get written to regularly.

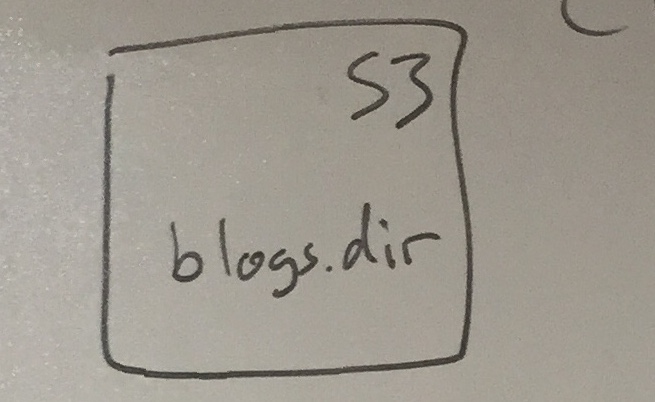

Another option, and I think this was before we started talking about BTSync, but not sure if this would be in possible in addition to BTync, is have the blogs.dir folder for a WordPress Multisite that handle all the individual site files uploaded be sent to S3, Amazon’s file storage service.

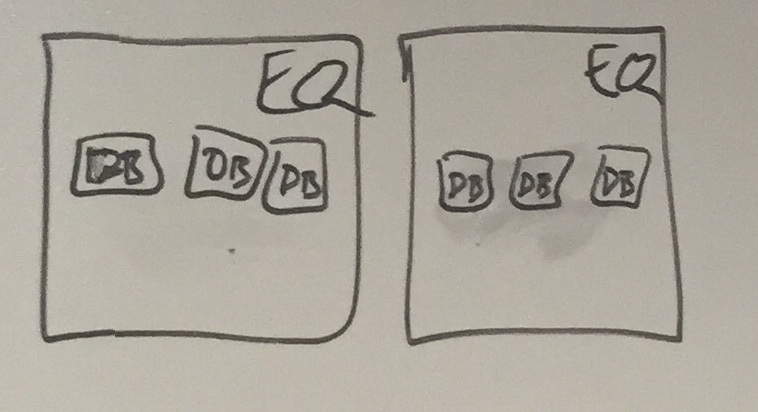

You get the sense that part of what’s happening when you move an application like WordPress Multisite onto AWS, or some other cloud-based, virtualized server environment, is each element is abstracted out to it basic functions. Core files that are read-only are separate from anything that is written to, whether that be themes, plugins, or uploads. Additionally, the database is also abstracted out, and you can run an EC2 instance on AWS with Docker containers each running MySQL (with a sharDB or HyberDB to further break up load) that can also replicate various writes and calls using BTSync? No single point of failure, and you greatly reduce the load on a WPMS which is completely I’m out of my depth here, but if I I accomplished anything here it might be giving you an insight to my confusion, which is also my excitement about figuring out the possibilities.

I have no idea if this makes sense, and I would really love any feedback from anyone who knows what they are talking about because I’m admittedly writing this to try and understand it. Regardless, it was pretty awesome hearing Tim lay it out because it certainly provides a pretty impressive solution to running large, resource intensive WordPress Multisite instance.

I have no idea if this makes sense, and I would really love any feedback from anyone who knows what they are talking about because I’m admittedly writing this to try and understand it. Regardless, it was pretty awesome hearing Tim lay it out because it certainly provides a pretty impressive solution to running large, resource intensive WordPress Multisite instance.

It makes a lot of sense, and you explain in very nice and plain terms the very powerful concept of abstraction! Congrats to you and Tim!! I’d love a class on this.

Damn decent overview! The one thing I would point out is that the reason that blogs.dir is running in S3 and the network file storage (running BTSync or whatever else) is separate is because plugins and themes have to run PHP code and that won’t work with S3 (there are hacks using things like S3FS but it never works well and can be expensive in terms of bandwidth). So S3 is the uploads of all the sites and the network storage is the plugins and themes that need to be shared across all of them.