At Reclaim Hosting we’ve been exploring plugins for offloading media from larger WordPress instances to object storage such as AWS’s S3 or DigitalOcean’s Spaces. There are a lot of good reasons to do this:

- First and foremost, we want to save space on Reclaim Cloud servers. A big WordPress Multisite can eat up a ton of space on uploads alone, and that’s less than ideal given those servers are dedicated, with fixed storage for optimal performance.

- If we have to migrate a larger WordPress site, having the files in cloud-based object storage makes that process quicker and easier, since no media files need to be moved.

- Offloading WordPress media helps ensure there are no issues serving media for a multi-region setup that is distributing traffic across several servers.

- And, ideally, it is faster, given you should be able to serve the media through a content delivery network (CDN) like Cloudflare that stores/caches media across its vast network, making assets quicker to load.

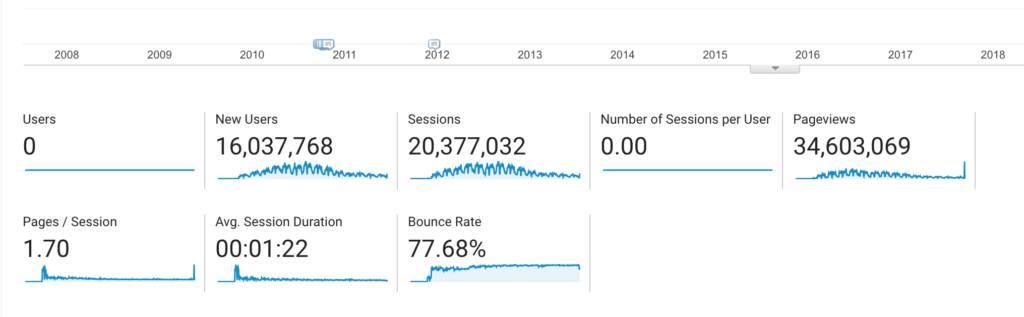

So, with the why out of the way, the next question is the what? As usual, I am using this, the bav.blog, as the initial test run for a single WordPress instance. I’ll be offloading the 10GB of media in the uploads folder on this blog to AWS’s S3. Simultaneously, I’m using ds106 as a test for offloading media from a small-to-decent sized WordPress Multisite instance, so this is a two-pronged test.

Now to the most interesting question, how? I did some ‘research,’ and the WP Offload Media plugin seems to be the most fully-featured option available. For our purposes we are using the Pro version, given we need many of the advanced features and plan on rolling this out more broadly for some of our bigger WordPress sites.

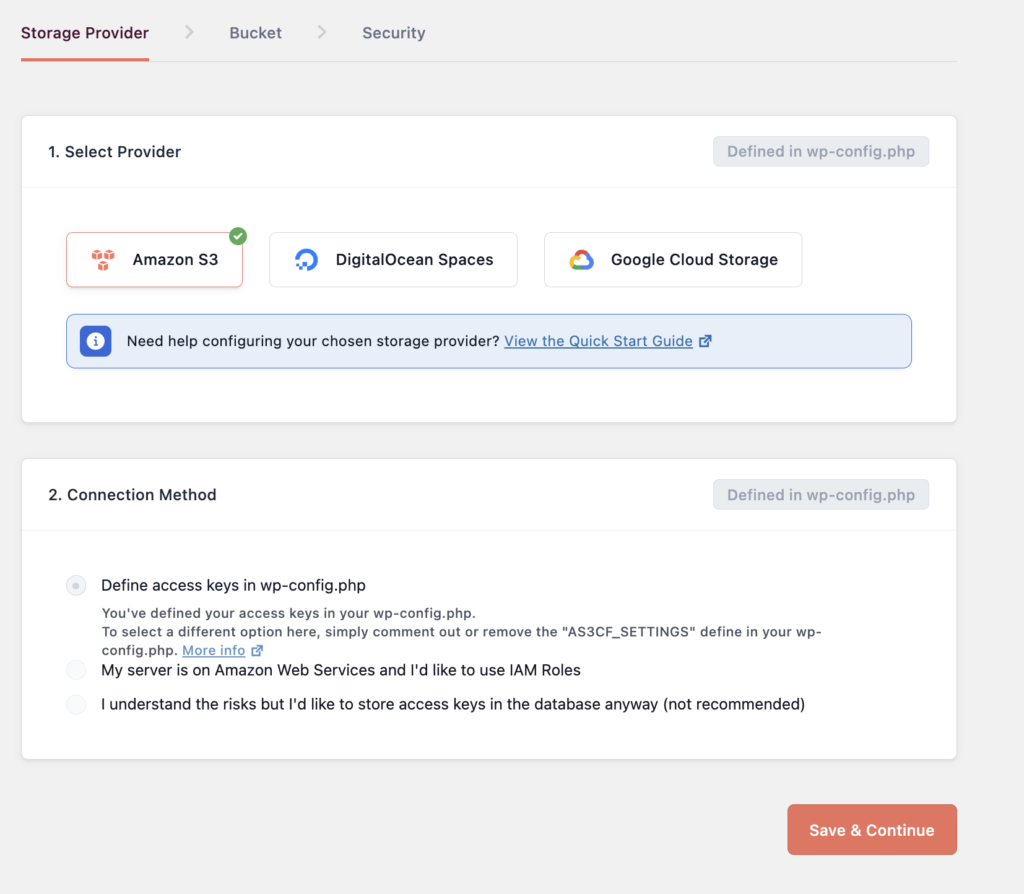

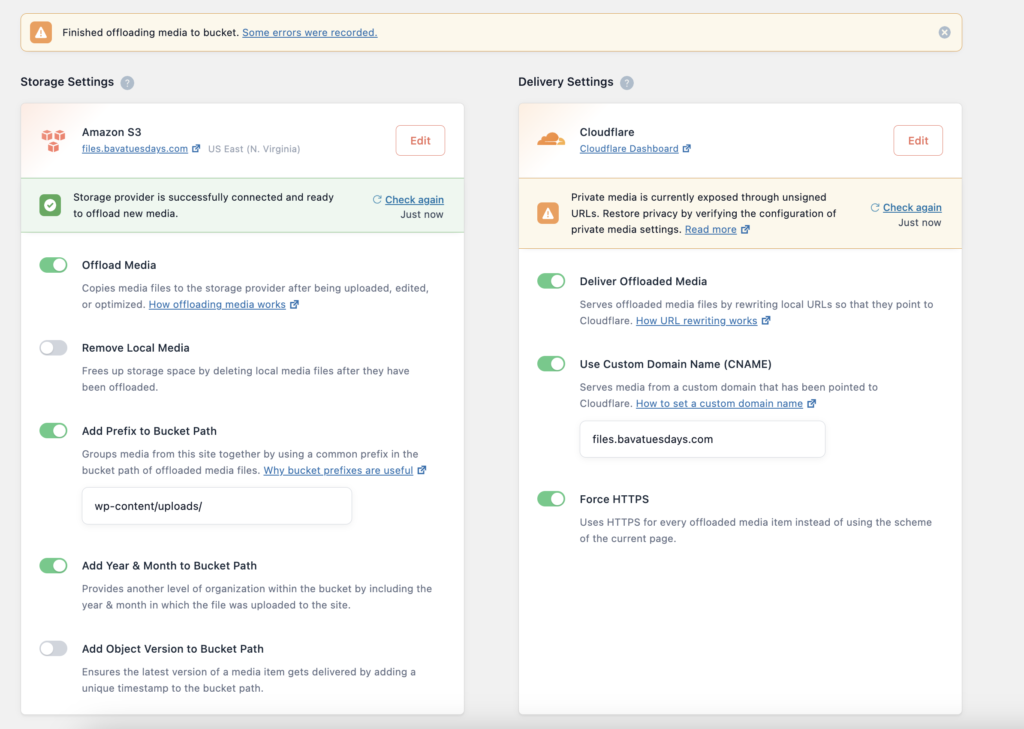

WP Offload Media Plugin Interface

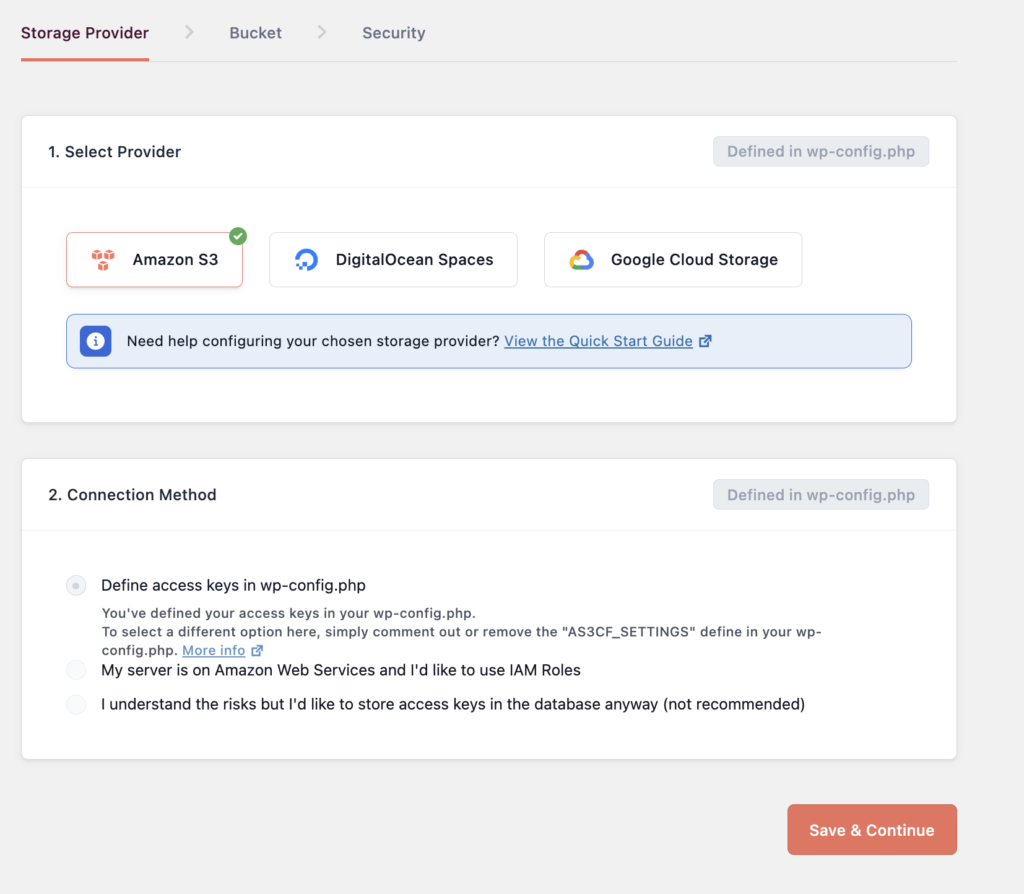

It has integrated tools to help you set up your storage across several S3 service providers, including AWS, DigitalOcean and Google. This is where you are instructed how to add your S3 keys, define the bucket, and control the security settings for the bucket.

Storage Provider Interface for WP Offload Media

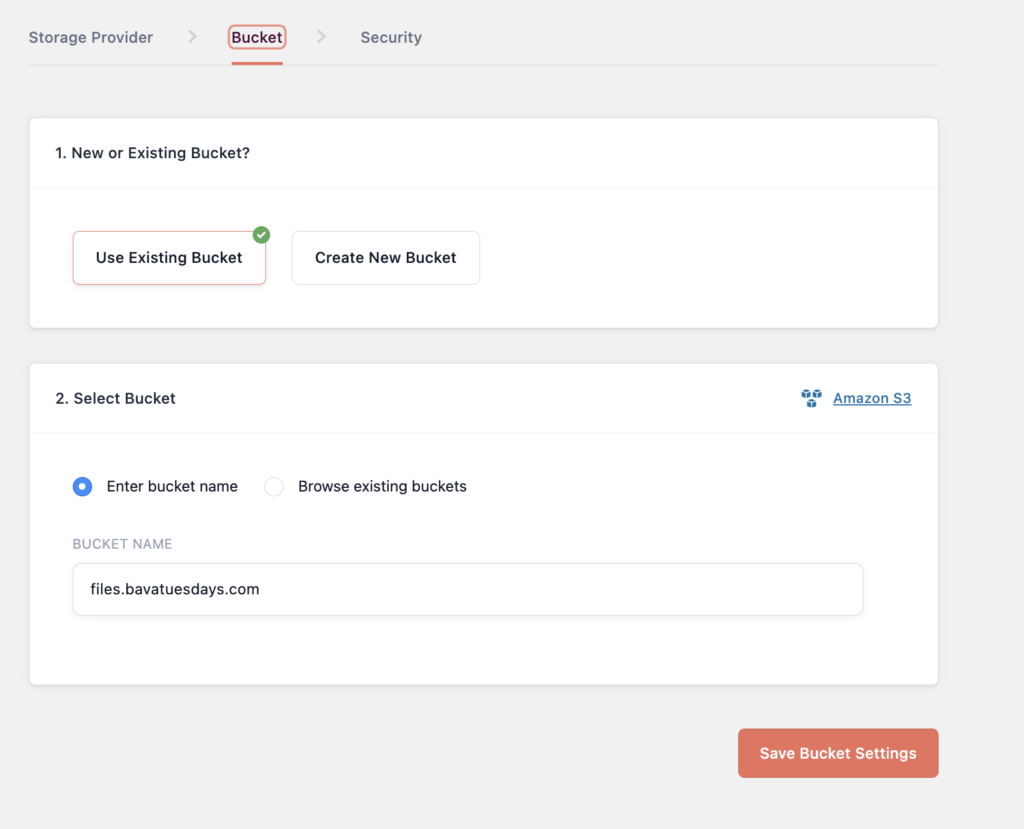

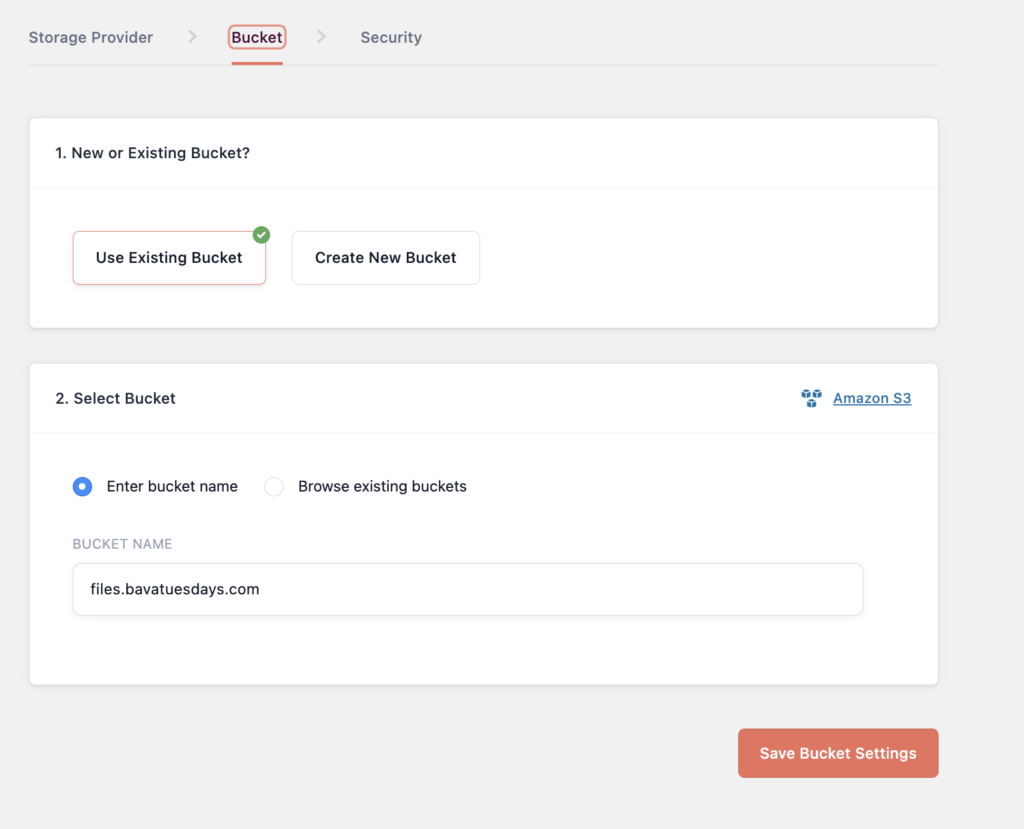

One important thing you need to do if you want the S3 files served over a domain alias is to give the bucket the same name as the alias domain. For example, for bavatuesdays the files will be served over files.bavatuesdays.com, so the S3 bucket is also named files.bavatuesdays.com:

WP Offload Media Bucket Interface

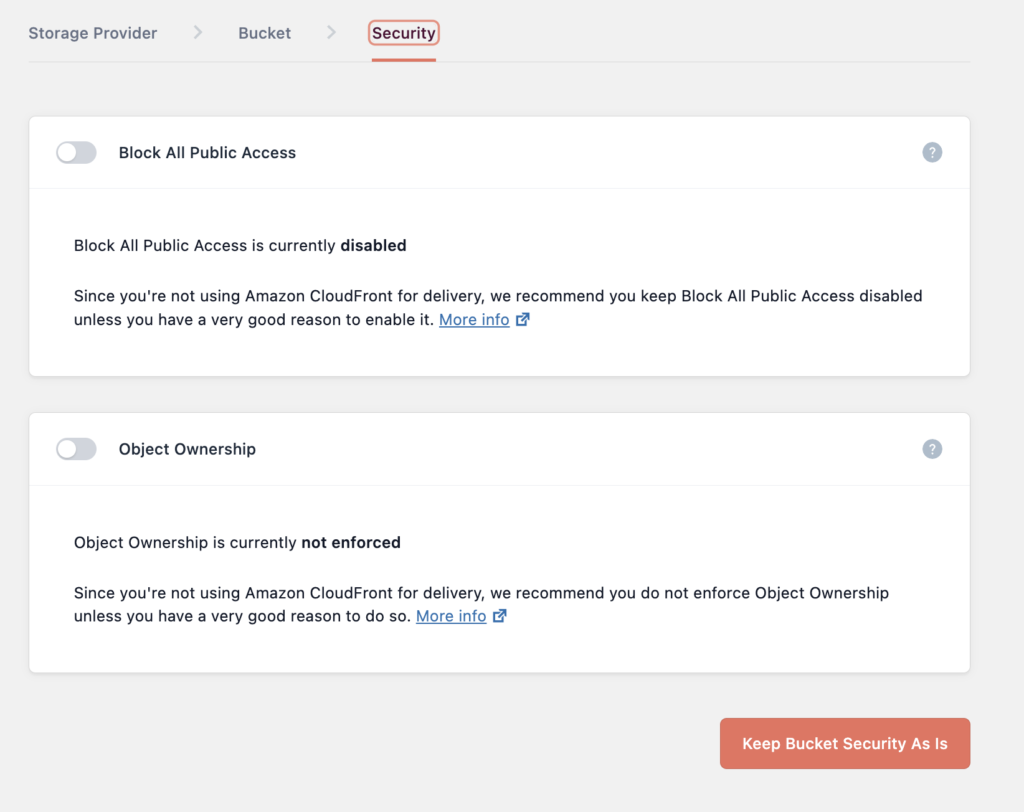

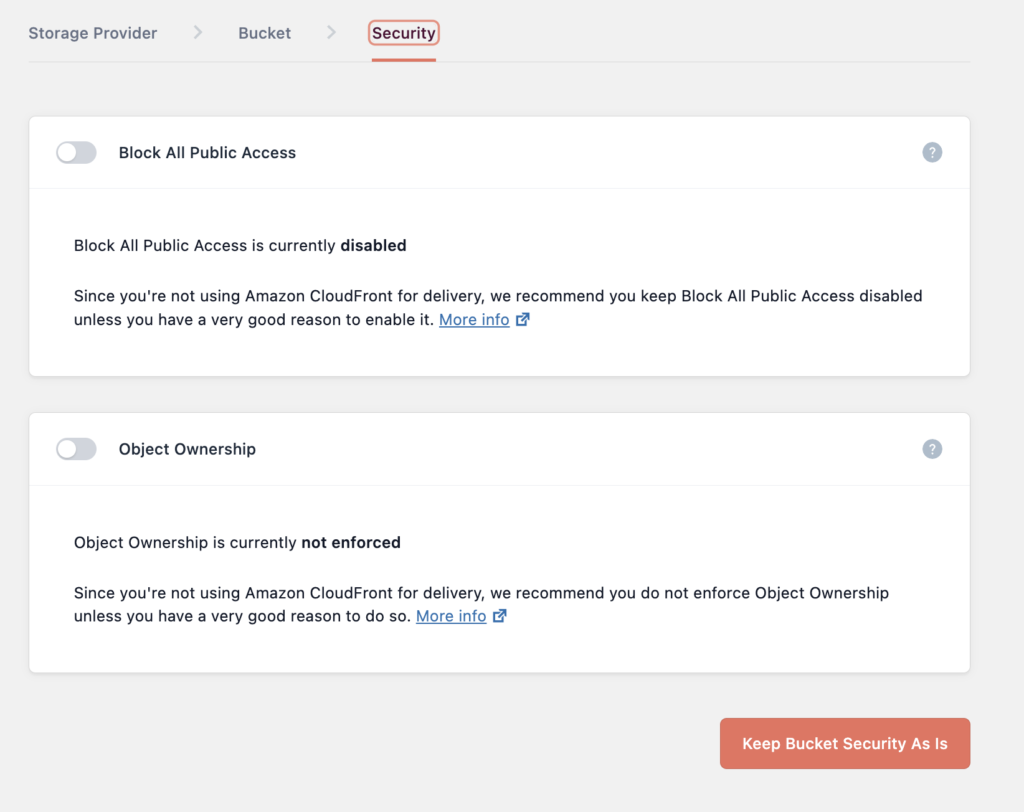

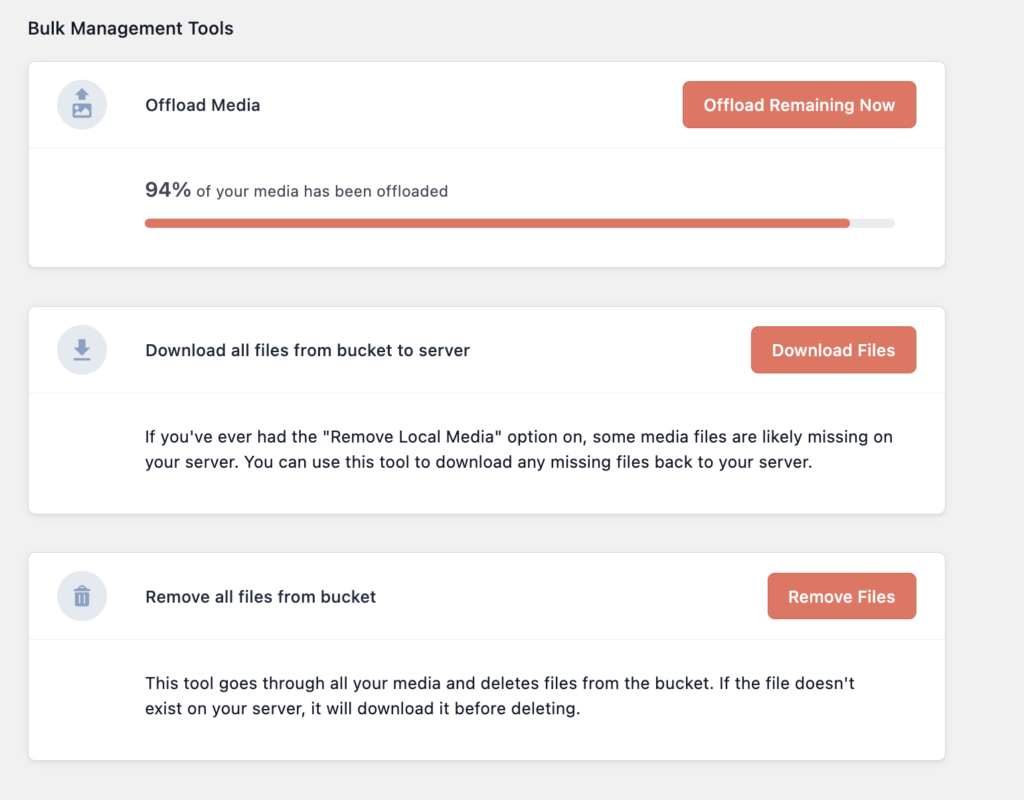

WP Offload Media Security Interface

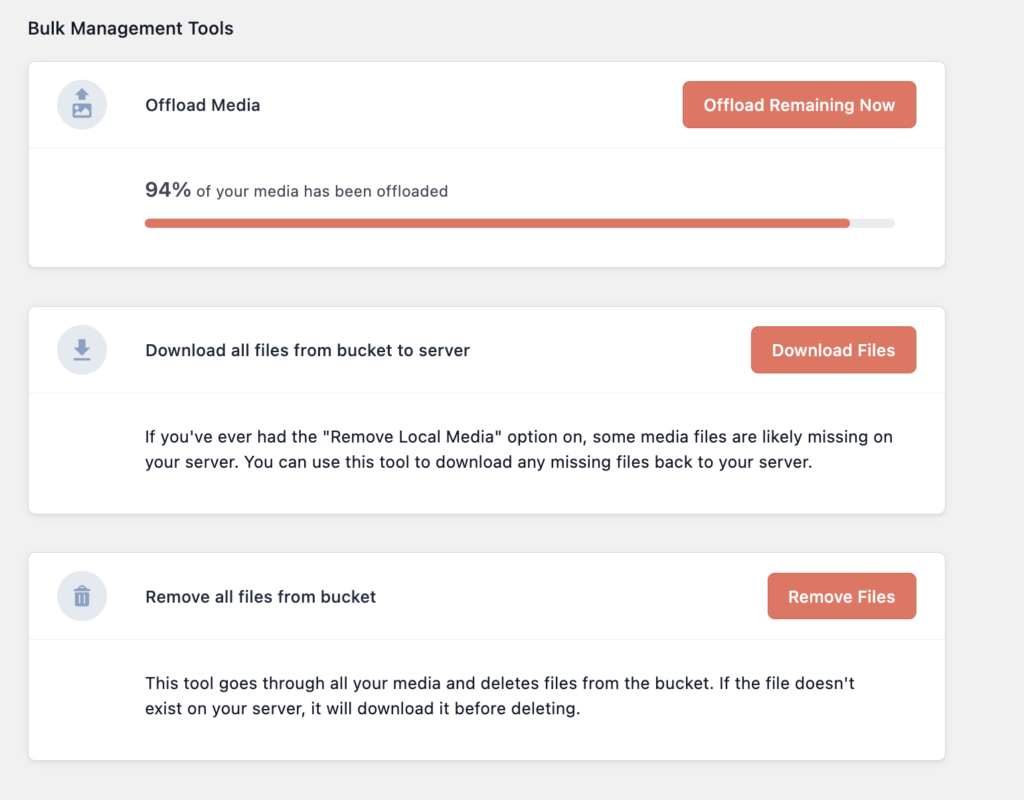

Once your bucket is connected, you can then use the tools available to offload your media to the new storage provider. I found this step took quite a while for bavatuesdays. I had 6,000 files to copy over, and that took several days to complete. It worked, although about 6% of the files had issues that were linked to being in new locations.
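For scale, 6% of 6,000 files is roughly 360 files to chase down. One way to find stragglers like these is to compare the files in the local uploads folder against the keys in the bucket. What follows is only a sketch of that comparison, not something the plugin provides: the function name is made up, and the `wp-content/uploads/` key prefix is an assumption about how objects are laid out in the bucket, so adjust it to whatever path setting you chose.

```python
def find_unoffloaded(local_paths, bucket_keys, prefix="wp-content/uploads/"):
    """Return local upload paths that have no matching key in the bucket.

    local_paths: paths relative to the uploads folder, e.g. "2024/01/a.jpg"
    bucket_keys: full object keys as listed from the bucket
    prefix:      ASSUMED key prefix in the bucket (hypothetical default)
    """
    # Strip the prefix so bucket keys can be compared with local relative paths.
    remote = {key[len(prefix):] for key in bucket_keys if key.startswith(prefix)}
    return sorted(path for path in local_paths if path not in remote)


if __name__ == "__main__":
    local = ["2024/01/a.jpg", "2024/01/b.jpg"]
    keys = ["wp-content/uploads/2024/01/a.jpg"]
    print(find_unoffloaded(local, keys))
```

The two input lists could come from walking the uploads folder and from a bucket listing; how you gather them is up to you.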

I was wondering if I could use the s3cmd tool to sync all the pre-existing files over to the S3 bucket, and then serve the media from there using this plugin, without having to go through the offload media tool for previously uploaded media, given how long it took on bavatuesdays. I used s3cmd to sync the media files for the ds106 multisite before offloading, and while all the media moved over cleanly, I still needed to run the offload tool through the WP Offload Media plugin. While it took forever on bavatuesdays, as noted already, it did all 11,000 files for ds106 in about 15-20 minutes. So I am wondering if syncing all files to the S3 bucket beforehand made offloading faster, or if the fact that bavatuesdays is a multi-region site caused issues that slowed it down on that site. I’m not sure, but I will definitely have to figure that piece out.
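For anyone wanting to try the same pre-sync, here is a rough sketch of how the s3cmd call could be assembled. It only builds the command rather than running it, and it defaults to s3cmd’s `--dry-run` flag so nothing is uploaded until you have checked the output. The uploads path, the bucket name, and the `wp-content/uploads/` prefix in the example are all assumptions for illustration, not the exact values used for ds106.

```python
import shlex


def build_sync_command(uploads_dir, bucket, prefix="wp-content/uploads/", dry_run=True):
    """Build an `s3cmd sync` command as an argument list.

    uploads_dir: local uploads folder (hypothetical path in the example below)
    bucket:      bucket name (assumed here to match the domain alias)
    prefix:      ASSUMED destination prefix inside the bucket
    """
    cmd = ["s3cmd", "sync"]
    if dry_run:
        # Show what would be transferred without uploading anything.
        cmd.append("--dry-run")
    # The trailing slash on the source syncs the folder's contents, not the folder itself.
    cmd += [uploads_dir.rstrip("/") + "/", f"s3://{bucket}/{prefix}"]
    return cmd


if __name__ == "__main__":
    command = build_sync_command("/path/to/wp-content/uploads", "files.ds106.us")
    print(shlex.join(command))
```

The returned list can be passed to `subprocess.run` once the dry run looks right and `dry_run=False` is set.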

Also worth noting is that the WP Offload Media plugin worked cleanly for the ds106 multisite with various subdomains and at least one mapped domain, which is very good news.

One issue I ran into on bavatuesdays was linked to the fact that I had used the WP Offload Media Lite plugin a few years back as a test. It ran for about a year or so and then I turned it off, but when I activated the Pro version using a new bucket, namely files.bavatuesdays.com, the database had already stored details about the old bucket, bavamediauploads, that I’d set up previously, so there were some issues. I had to manually remove media from the old bucket through the Media Library and then offload it again to the new bucket. The plugin adds tools to the Media Library for removing and adding media to the S3 bucket, which is nice, and it is possible to bulk select the removal and offload options, so it was not too painful, but this is something to look out for if you have previously used the plugin.

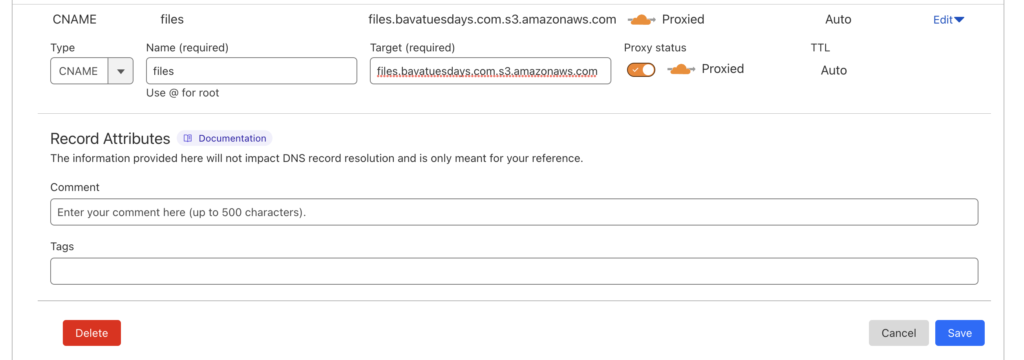

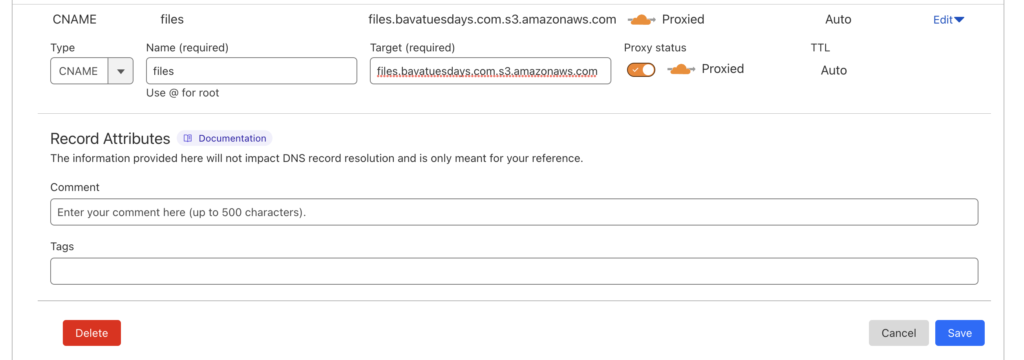

Once I had all the media offloaded cleanly, it was time to test running the delivery through Cloudflare. The first thing to do is create a CNAME domain alias that maps to the bucket in order to deliver media over the subdomain files.bavatuesdays.com. To do this, you create a CNAME in Cloudflare with files.bavatuesdays.com.s3.amazonaws.com as the value; the first part of the target, before the s3, is the domain alias (which is also the bucket name).

Adding CNAME for domain alias in Cloudflare

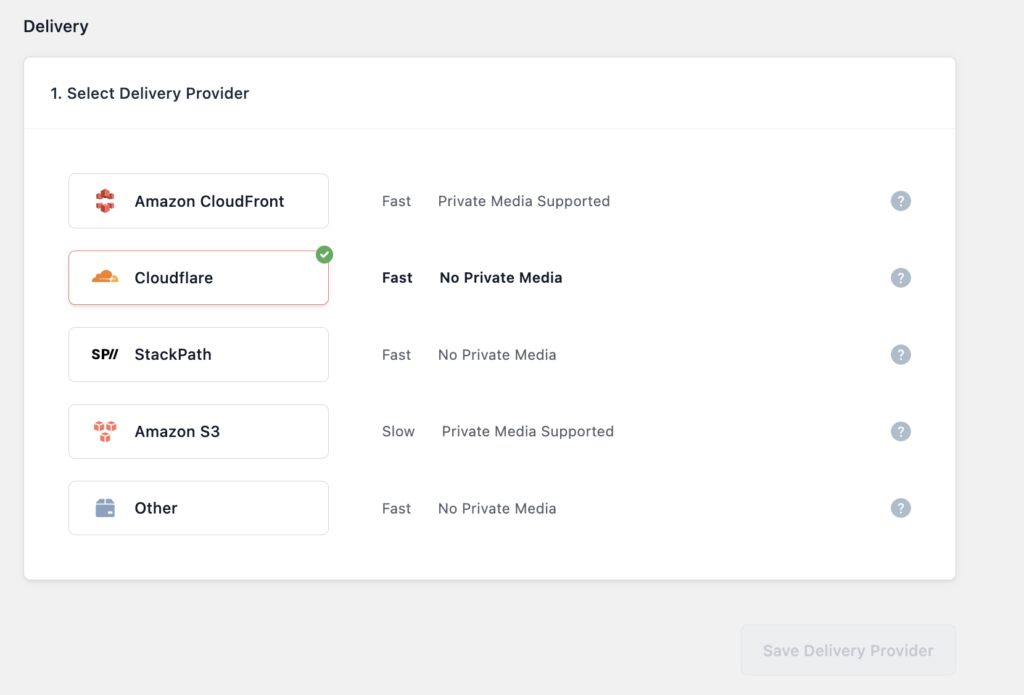

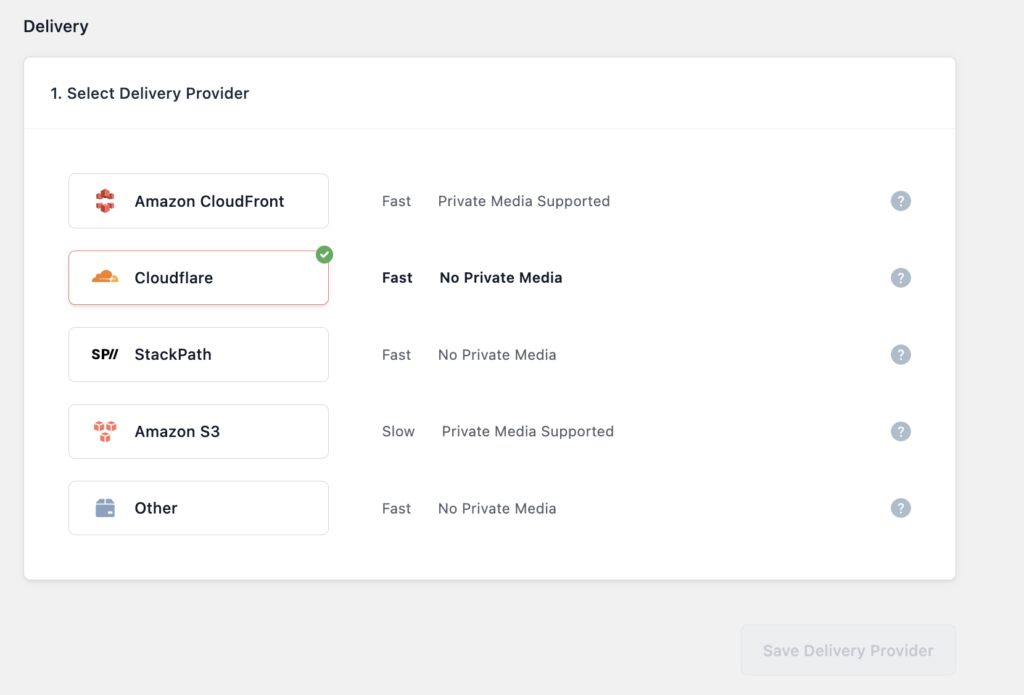
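The pattern for the CNAME is simple enough to write down as a small helper. This is just a sketch of the naming rule described above; the function name and the dictionary field names are my own choices for illustration, and it assumes the bucket is named exactly like the alias domain.

```python
def cname_record_for_bucket(alias_domain, zone):
    """Describe the CNAME that points an alias domain at a same-named S3 bucket.

    alias_domain: e.g. "files.bavatuesdays.com" (assumed to equal the bucket name)
    zone:         the DNS zone the record lives in, e.g. "bavatuesdays.com"
    """
    suffix = "." + zone
    if not alias_domain.endswith(suffix):
        raise ValueError(f"{alias_domain} is not a subdomain of {zone}")
    return {
        "type": "CNAME",
        # Record name relative to the zone, e.g. "files"
        "name": alias_domain[: -len(suffix)],
        # Target: the bucket name followed by the S3 hostname
        "content": f"{alias_domain}.s3.amazonaws.com",
    }


if __name__ == "__main__":
    print(cname_record_for_bucket("files.bavatuesdays.com", "bavatuesdays.com"))
```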

Once that is added, go to the delivery section of the plugin and select the provider you will be using. As you might have guessed, this blog is using Cloudflare to harness their CDN for faster media delivery.

WP Offload Media Select Delivery Provider Dialogue Box

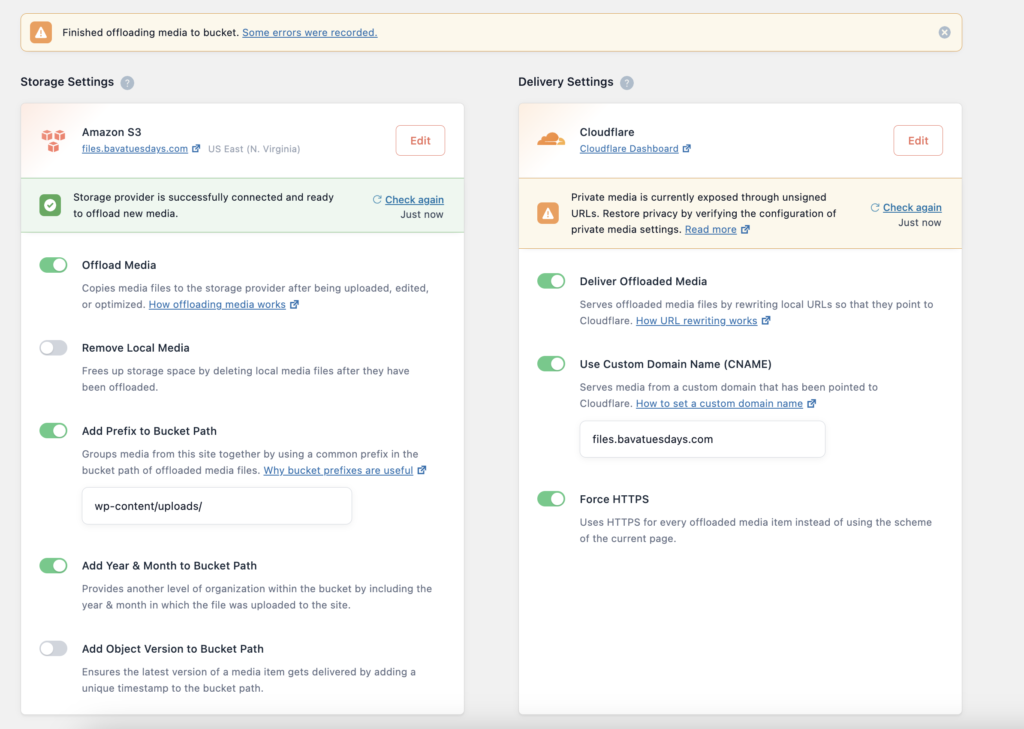

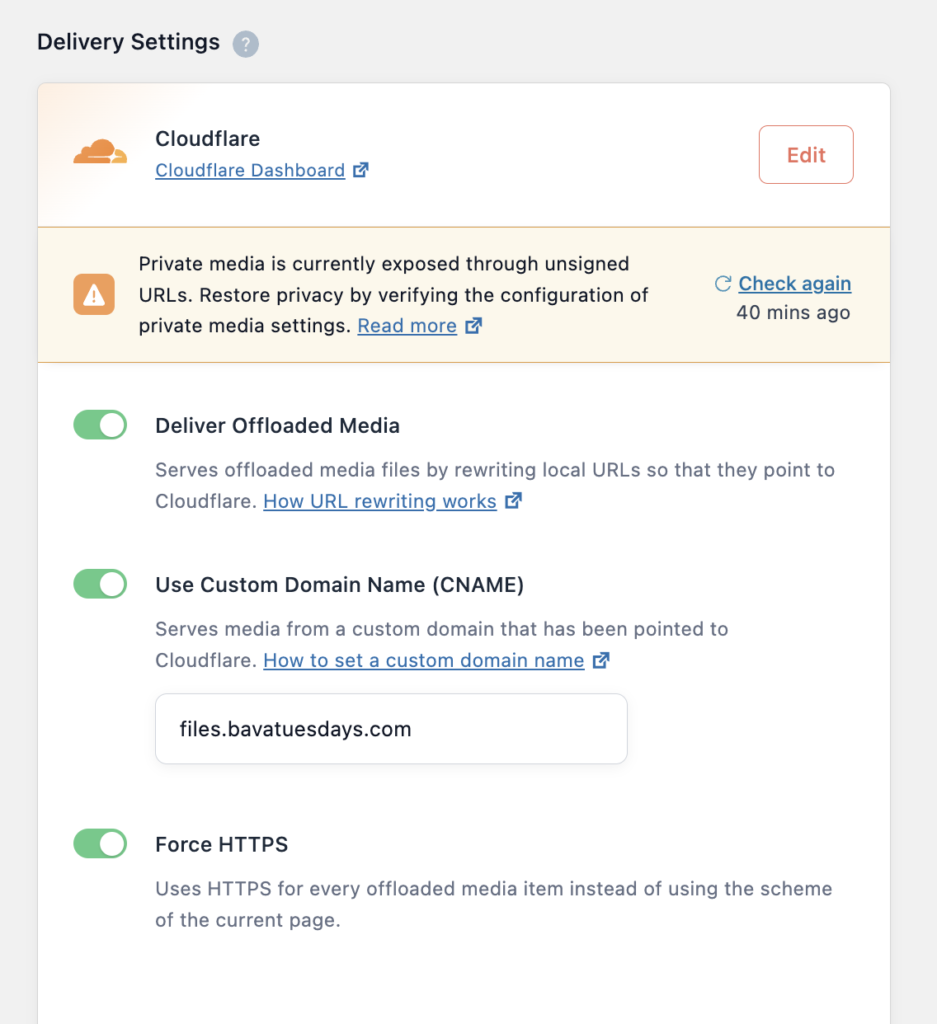

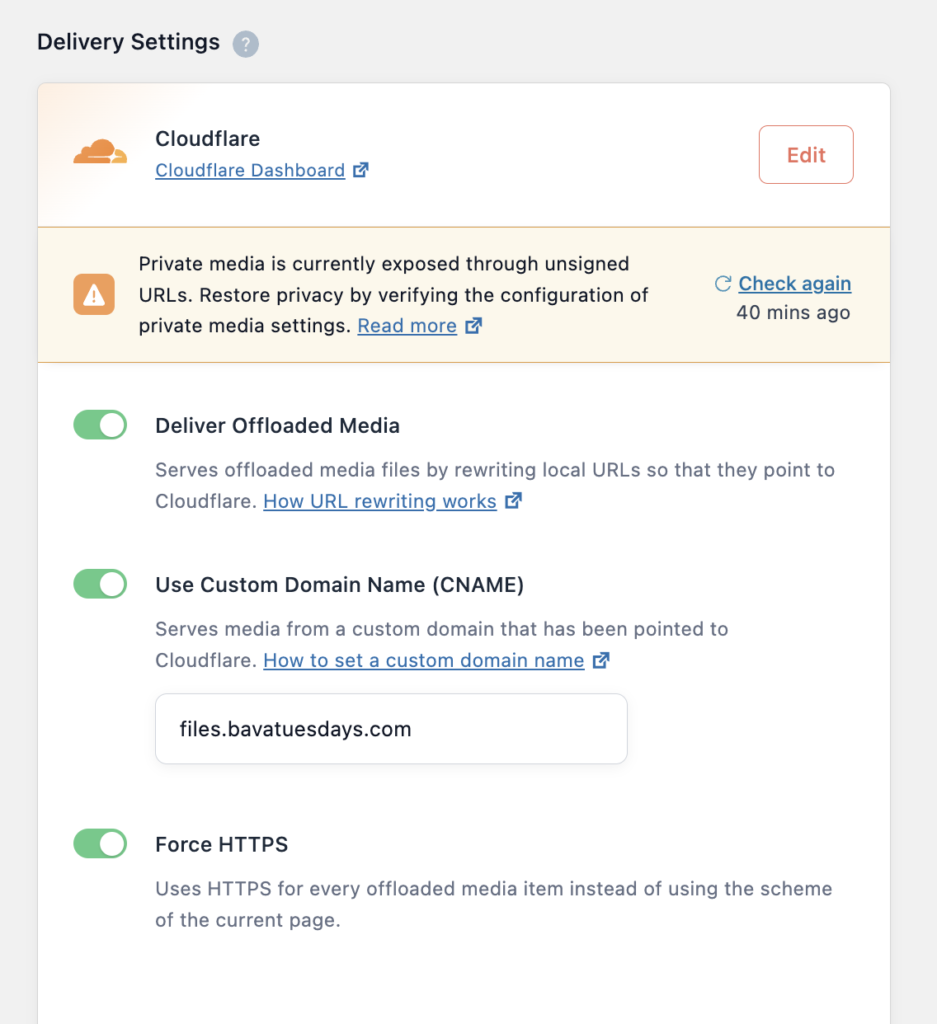

After that, toggle the “Deliver Offloaded Media” button to start delivering media from the S3 bucket, and also toggle the Use Custom Domain Name option to enable the alias files.bavatuesdays.com:

WP Offload Media Delivery Options
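To make the effect of those two toggles a bit more concrete, here is a sketch of the kind of URL rewrite that results, with media that used to load from the site’s own domain now loading from the alias. This is not the plugin’s code. The function is hypothetical, and it assumes the object path in the bucket mirrors the `/wp-content/uploads/` path on the site, which depends on your path settings.

```python
from urllib.parse import urlsplit, urlunsplit


def rewrite_media_url(url, site_host, alias_host, uploads_path="/wp-content/uploads/"):
    """Point an uploads URL at the alias domain, leaving other URLs untouched.

    uploads_path is an ASSUMPTION: it presumes bucket keys mirror the site path.
    """
    parts = urlsplit(url)
    if parts.netloc != site_host or not parts.path.startswith(uploads_path):
        # Not a local media URL, so leave it alone.
        return url
    # Serve over HTTPS from the alias domain, keeping path, query and fragment.
    return urlunsplit(("https", alias_host, parts.path, parts.query, parts.fragment))


if __name__ == "__main__":
    print(rewrite_media_url(
        "https://bavatuesdays.com/wp-content/uploads/2024/01/image.png",
        "bavatuesdays.com",
        "files.bavatuesdays.com",
    ))
```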

I also selected Force HTTPS, but I’m not sure that is making a difference given that is already happening on Cloudflare. Also, there’s a private media settings error that I think is mainly linked to AWS’s CloudFront option, so I’m not sure if it is relevant for this setup through Cloudflare, but I will need to verify as much.

I’m using a similar domain alias on ds106.us as well (files.ds106.us), and it worked identically for the WordPress Multisite setup and is functioning without issues as far as I know. I still have to test some more sophisticated permissions setups for serving media, but all in all, I think this plugin really moves us towards being able to offload a significant amount of the media stored on our dedicated servers to S3 buckets, which should free up terabytes of space on our Cloud infrastructure, and that would be a gigantic win!