Hope springs eternal in the bava breast. This is the third attempt since November of 2021 to get a WordPress Multi-Region setup working on Reclaim Cloud; I blogged attempts one and two in some detail. And I’m glad I did, because returning to it after four short months feels like starting from scratch, so the blog posts help tremendously with the amnesia. They serve a similar purpose to the polaroids in Memento.

My return to Multi-Region was spurred on by the realization that the documentation provided by Virtuozzo (formerly known as Jelastic) describes the WordPress Multi-Region Cluster installer as a Beta version, whereas the one I’ve been playing with in Reclaim Cloud is still Alpha. This led me to look through the Jelastic marketplace installers (JPS files) on GitHub to see if there’s more than one installer for WordPress Multi-Region setups. While I could not find the Beta version of the WordPress Multi-Region Cluster installer, I did find a Beta version of a WordPress Multi-Region Standalone installer. The difference between the two is that the standalone does not create multiple app servers, databases, etc., within a single environment that is then reproduced across as many as 4 environments in different regions. This significantly reduces the complexity, which gave me some hope that this just might work.

What’s more, I’m already running bavatuesdays in a standalone WordPress environment using Nginx (LEMP) on Reclaim Cloud, so the difference would be that this new instance uses LiteSpeed (LLSMP) and replicates the entire instance in one additional data center (there were no options for more than two regions). It is two standalone instances replicated across two different regional data centers. Here’s hoping simpler is better.

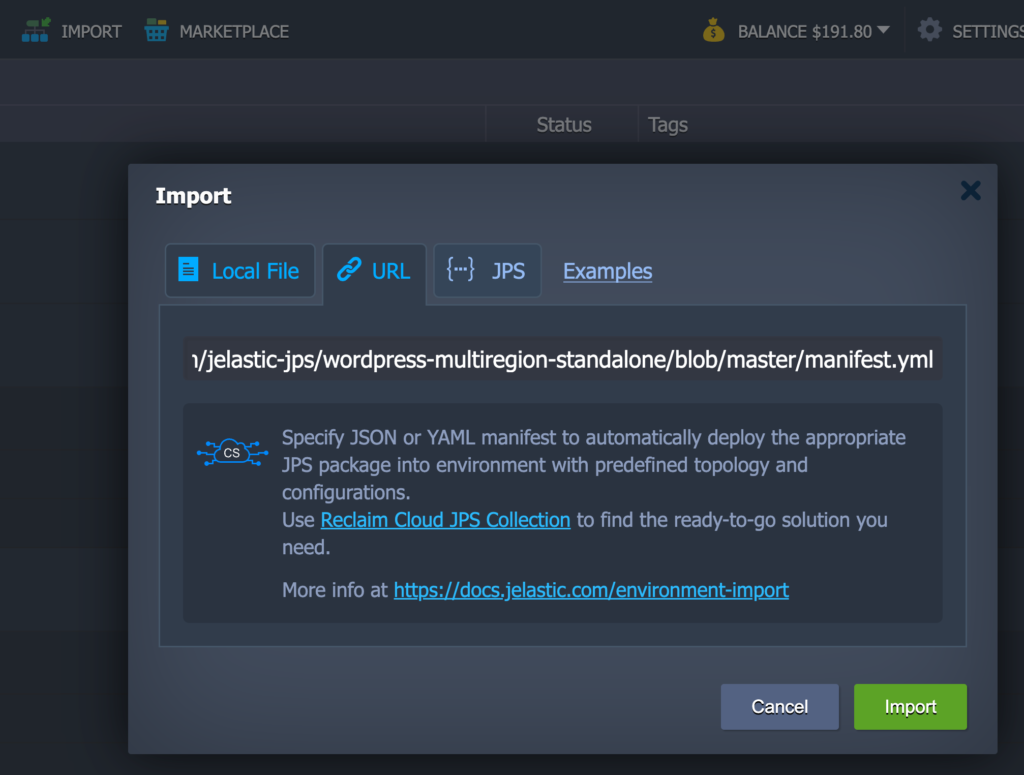

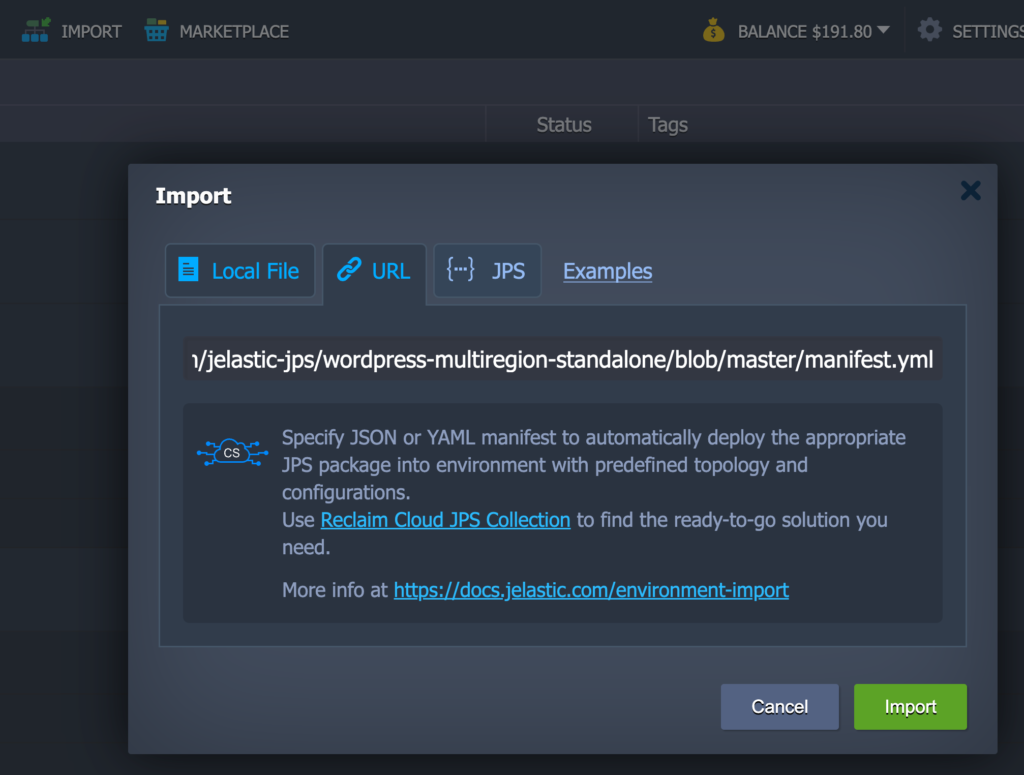

The fact that I am deep into container learning this month helped my confidence a bit, particularly when grabbing the URL for the manifest.yml file that provides the directives for setting this up in Reclaim Cloud. We don’t have the WordPress Multi-Region Standalone installer available, but you can still install it by going to the Import option in Reclaim Cloud and copying the manifest.yml URL into the URL tab:

Import tool to grab a manifest.yml file to build out the WordPress Multi-Region Standalone installer

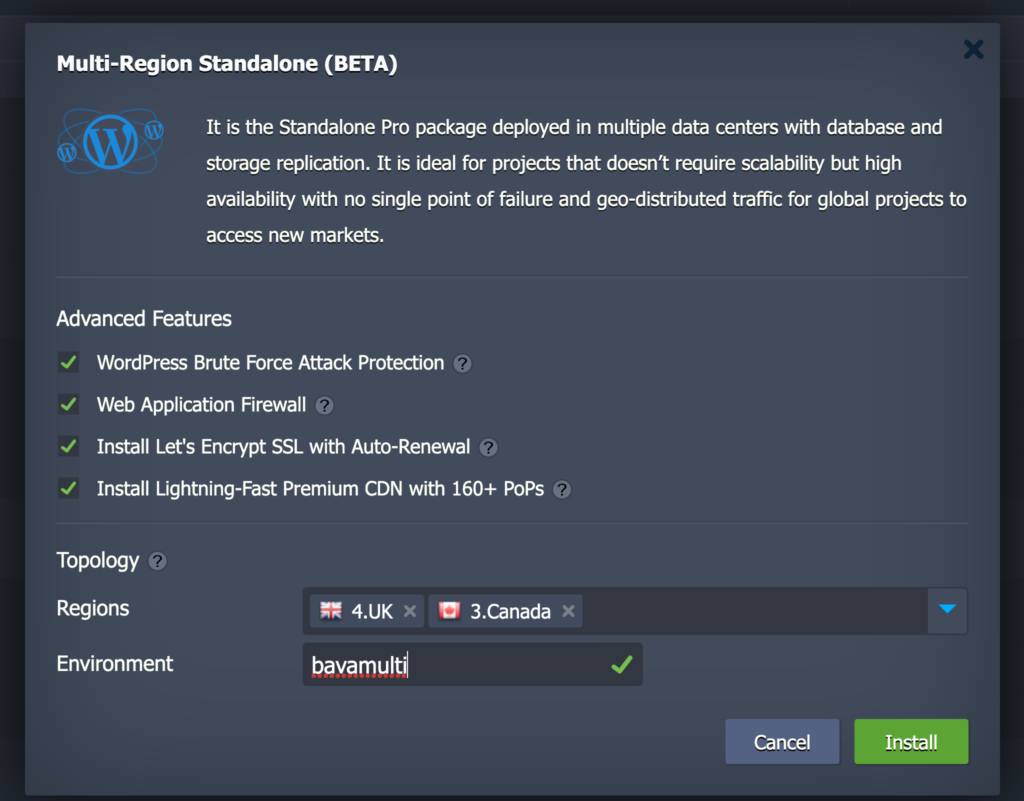

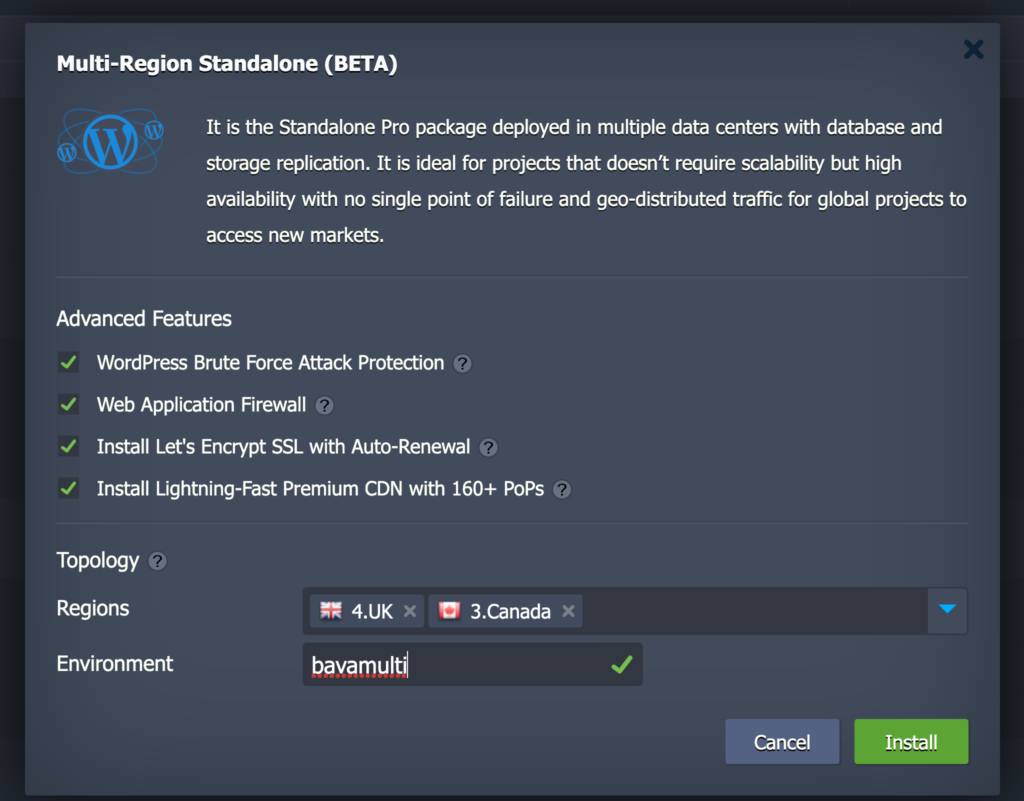

Once you click Import you will be given the options for setting up your WordPress Multi-Region Standalone setup:

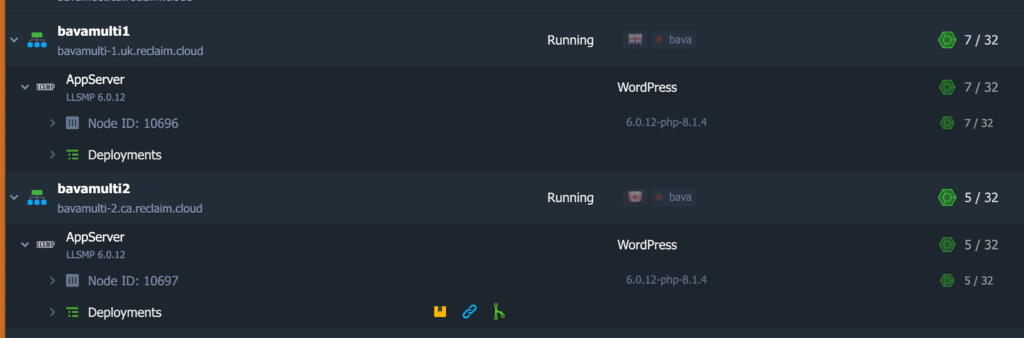

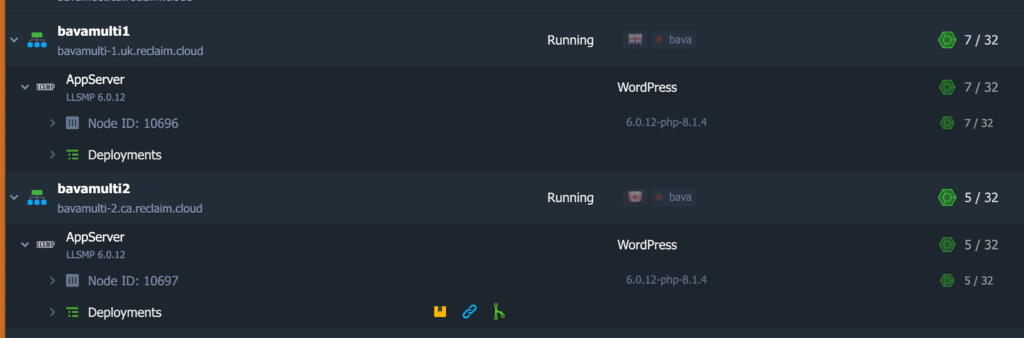

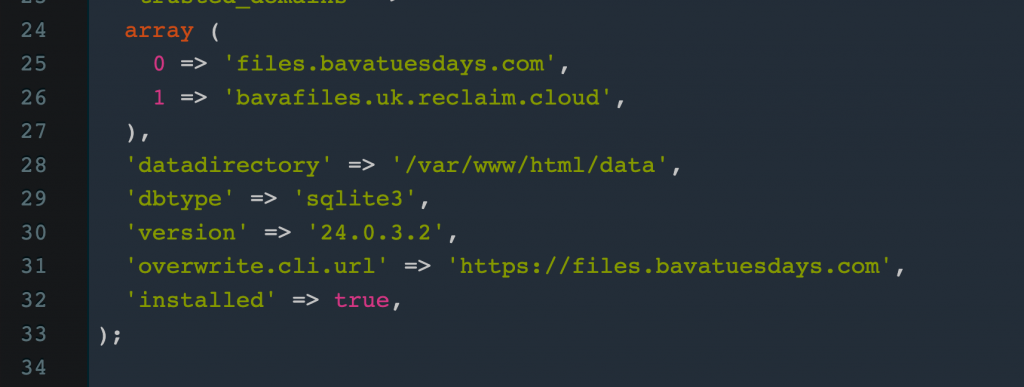

You are limited to two regions with this installer, and the first region you list becomes your primary, but I’m not convinced that matters as much as it does with the Cluster Multi-Region setup. After that, you let the two environments set up, and the URLs of each will be something like bavamulti-1.uk.reclaim.cloud and bavamulti-2.ca.reclaim.cloud.

Once the environments are created you will get an email with the details for logging into the WordPress admin area (same for both setups) as well as LiteSpeed and MySQL database credentials. [Note to self: that is an important email, so be sure to save it.] Once everything is set up you need to do a few things to each environment:

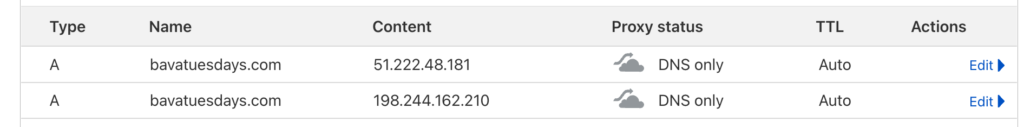

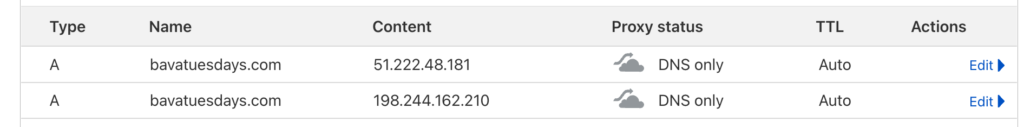

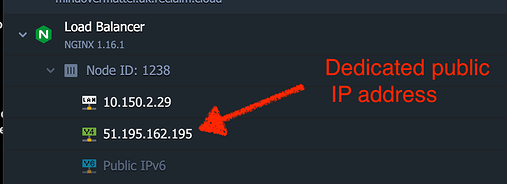

- Make sure you have added two A records for your custom domain. There should be one record for each of the environments’ public IP addresses. I use Cloudflare for this and it looks something like:

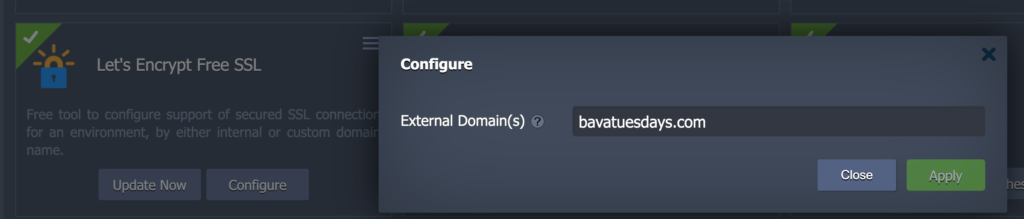

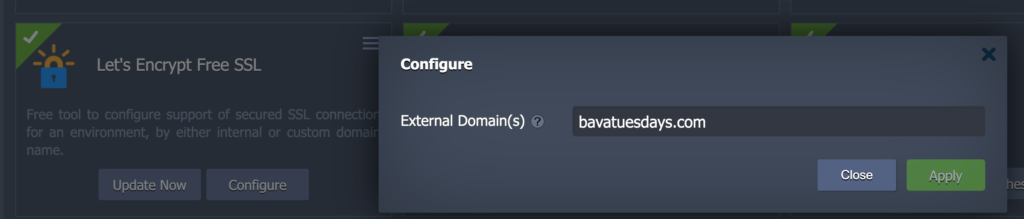

- Add a Let’s Encrypt Certificate for your custom domain in each environment:

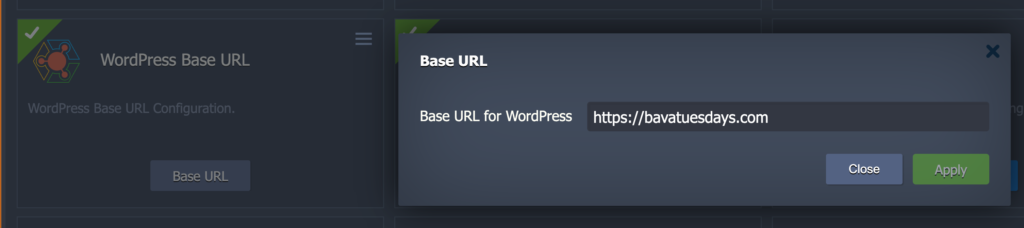

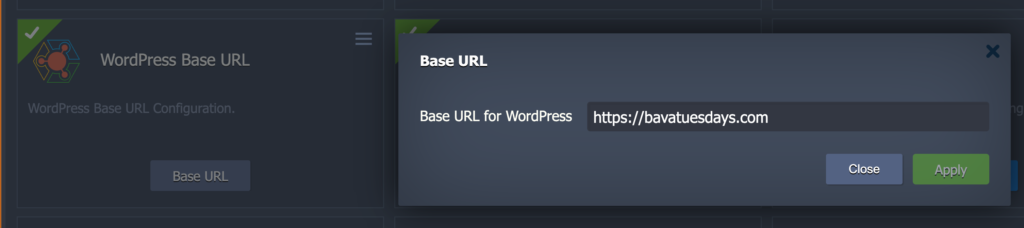

- Update the site URL in both environments using the WordPress Site Address addon:

If you are starting from scratch then you should be good to go at this point with the setup. I had a few extra steps given I’m importing my files and data from an existing WordPress site. To do this I used rsync to copy files between environments and a command line database import, given the web-based phpMyAdmin import was consistently timing out.

Rsyncing files between environments has been a bit of a struggle for me in previous attempts, but luckily I documented this process, and finally feel like I have made a breakthrough in my understanding, although I still had to lean on Chris Blankenship for help this time around. Here are the steps:

- Create a key pair on the environment node you are migrating from and copy the public key (the file ending in .pub) into the authorized_keys file on the new environment node you’re moving to. Here is the command I used to create the key pair on the environment node I am migrating from:

ssh-keygen -t ed25519 -a 200 -C "jim_at_reclaimhosting.com" -f ~/.ssh/bava_ssh_key

- Make sure to do everything as root user on the server you are moving to and from; there’s a Root Access addon for this in the multi-region environment. Also, keep in mind you only need to rsync to one of the two multi-region environments you created. I chose to copy files and import the database to the bavamulti-1.uk.reclaim.cloud environment.

- For rsyncing I added the keys successfully, did everything as root, and still ran into an issue using the following command to rsync. Turns out that the multi-region WordPress has an application firewall built-in that was blocking access to the public IP address, so I needed to use the private IP LAN address instead, which worked!

rsync --dry-run -e "ssh -i ~/.ssh/bava_ssh_key" -avzh /var/www/webroot/ROOT root@PUBLIC_IP:/var/www/webroot/ROOT

If no luck, try the private LAN IP address instead:

rsync --dry-run -e "ssh -i ~/.ssh/bava_ssh_key" -avzh /var/www/webroot/ROOT root@PRIVATE_IP:/var/www/webroot/ROOT

- After that I needed to access phpMyAdmin on the old site, download the database, and then upload it to the bavamulti-1.uk.reclaim.cloud environment. I tried importing via the phpMyAdmin interface but it timed out; even when I compressed the file it was still taking too long. So I uploaded the sql file to the new multi-region environment and used the following mysql database import command:

mysql -u username -p new_database < data-dump.sql
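The two transfers above could be wrapped in a small script. This is a minimal sketch, not the exact commands used for this migration: the helper names (`sync_webroot`, `import_dump`), the `RSYNC_BIN` and `MYSQL_BIN` overrides, and the `root@` login are assumptions for illustration, while the key path and webroot come from the commands above. One thing worth knowing is that a trailing slash on the rsync source copies the contents of ROOT, whereas without it rsync creates a ROOT directory inside the destination.

```shell
#!/bin/sh
# Sketch only: helper names and the *_BIN overrides are illustrative assumptions.
KEY="$HOME/.ssh/bava_ssh_key"        # private key created with ssh-keygen above
SRC="/var/www/webroot/ROOT/"         # trailing slash: copy the contents of ROOT
DEST="/var/www/webroot/ROOT/"

# Rsync the webroot to a target host (ideally its private LAN IP).
# Runs as a dry run unless the second argument is "go".
sync_webroot() {
  dry="--dry-run"
  [ "${2:-dry}" = "go" ] && dry=""
  # $dry is left unquoted on purpose so an empty value adds no argument
  "${RSYNC_BIN:-rsync}" -avzh $dry -e "ssh -i $KEY" "$SRC" "root@$1:$DEST"
}

# Import a plain or gzip-compressed SQL dump into a database.
# usage: import_dump DUMP_FILE DATABASE USER  (mysql prompts for the password)
import_dump() {
  case "$1" in
    *.gz) gunzip -c "$1" ;;
    *)    cat "$1" ;;
  esac | "${MYSQL_BIN:-mysql}" -u "$3" -p "$2"
}
```

Here `sync_webroot PRIVATE_IP` previews the transfer and `sync_webroot PRIVATE_IP go` performs it, while `import_dump data-dump.sql.gz new_database username` streams a compressed dump straight into mysql without unpacking it on disk first.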

That worked perfectly: everything was imported and the site was immediately running cleanly in its new home. I checked if the files and data had been replicated to bavamulti-2.ca.reclaim.cloud (the Canadian-based environment) and I was happy to see that it happened almost instantly. So you only need to import to one environment and all files and data are immediately copied to the other, which is exactly how you want multi-region to work.
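One way to check replication more rigorously than eyeballing it is to fingerprint the webroot on each environment and compare the results. The sketch below assumes GNU coreutils (`sha256sum`) is present on both nodes; `tree_checksum` is a hypothetical helper name, and cache or log files that legitimately differ between regions would need to be excluded.

```shell
#!/bin/sh
# Print a single SHA-256 fingerprint covering every file under a directory.
# Paths are hashed relative to the directory so two servers can be compared.
tree_checksum() {
  (
    cd "$1" || exit 1
    find . -type f -exec sha256sum {} + | LC_ALL=C sort -k 2 | sha256sum | cut -d ' ' -f 1
  )
}
```

Running `tree_checksum /var/www/webroot/ROOT` on both environments and getting the same value suggests the files match; a mismatch only says that something differs, not what.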

The next test was turning off one of the environments to see if the site stays online, and that worked as well. So far it looks like a success. One of the issues I had with the Multi-Region cluster was getting new comments and posts to populate cleanly across all regions if one of the environments was down during the posting, so I will need to test that on the comments of this post, while also making sure one of the servers is down when I publish this.
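To test each environment on its own, independent of what DNS happens to return at that moment, curl's `--resolve` option can pin the domain to one IP address for a single request. A minimal sketch follows; `origin_status` and the `CURL_BIN` override are hypothetical names, and the IP addresses in the usage comment are documentation placeholders rather than the real servers.

```shell
#!/bin/sh
# Print the HTTP status code that one specific environment returns for the site.
# usage: origin_status DOMAIN IP
#   origin_status bavatuesdays.com 203.0.113.10    # placeholder IP, first environment
#   origin_status bavatuesdays.com 198.51.100.20   # placeholder IP, second environment
origin_status() {
  "${CURL_BIN:-curl}" -s -o /dev/null -w '%{http_code}\n' --max-time 10 \
    --resolve "$1:443:$2" "https://$1/"
}
```

A stopped environment should show up as a timeout, with curl printing 000 because it received no HTTP response, while the running one keeps answering with a normal status code.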

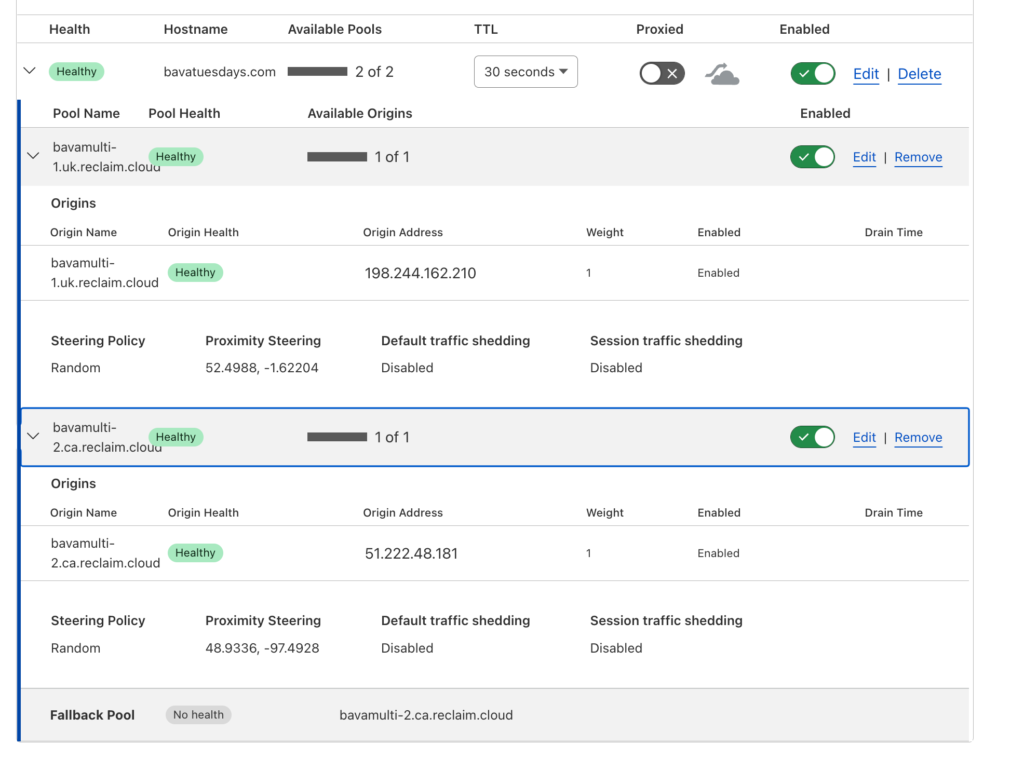

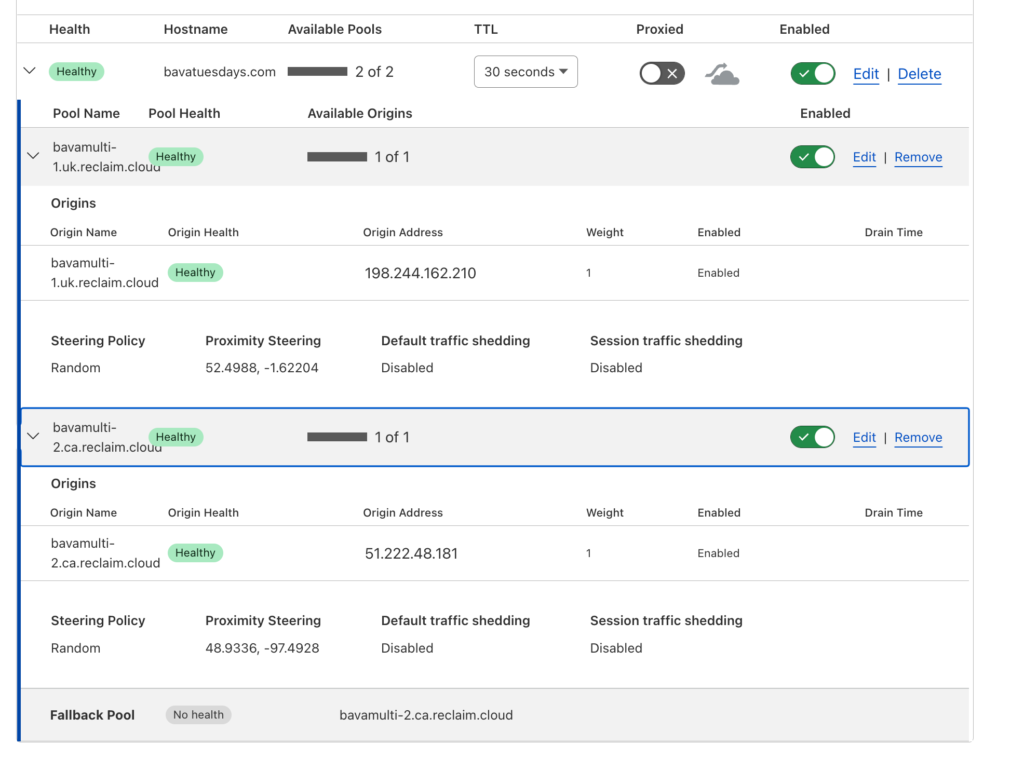

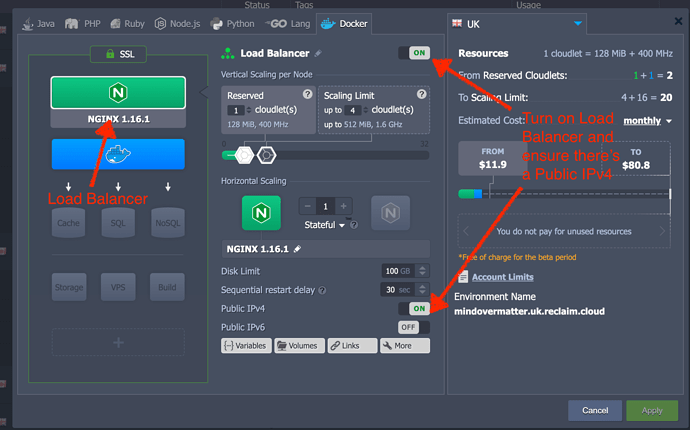

I decided to re-visit some of my previous work in Cloudflare setting up a load balancer and monitoring the two environments to accomplish a few things:

- Ensuring that if one of the two servers goes down I am notified

- Steering traffic so that visitors closest to the Canadian server get directed there, and visitors in Europe get pushed to the UK server.

- Testing load balancing to ensure if one of the two environments goes down the online server is the only available IP so that there are no errors for incoming traffic

All of these details are laid out beautifully in this post on the Jelastic blog about load balancing a multi-region setup, so much of what I will be sharing below is just my walking through their steps.

In Cloudflare, under Traffic → Load Balancer for a specific domain, you can create a load balancer that lets you define pools of servers to be monitored, so that when downtime is detected you not only get an email, but Cloudflare also redirects all traffic from the server with an issue to a server that is online.

Cloudflare load balancing

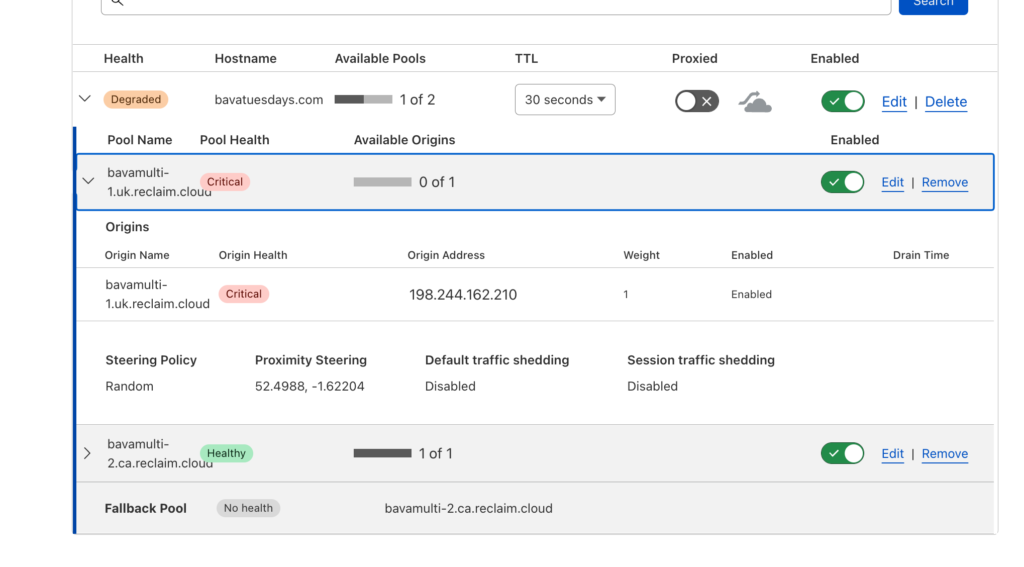

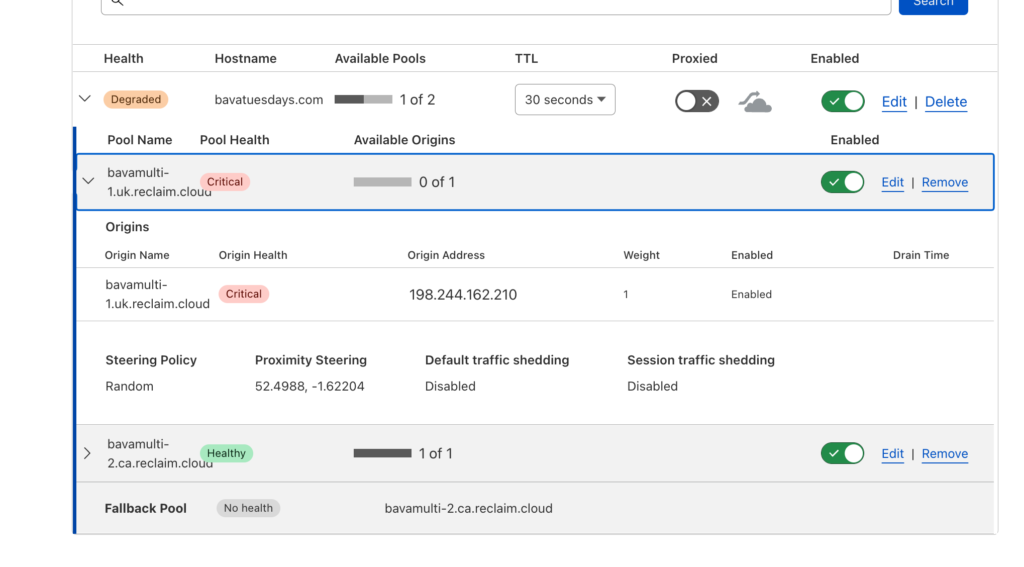

In the image below you can see that the UK environment is reported as critical, meaning it’s offline. In that scenario all traffic should be pointed to the healthy server in Canada.

View of a server with an issue in Cloudflare load balancer

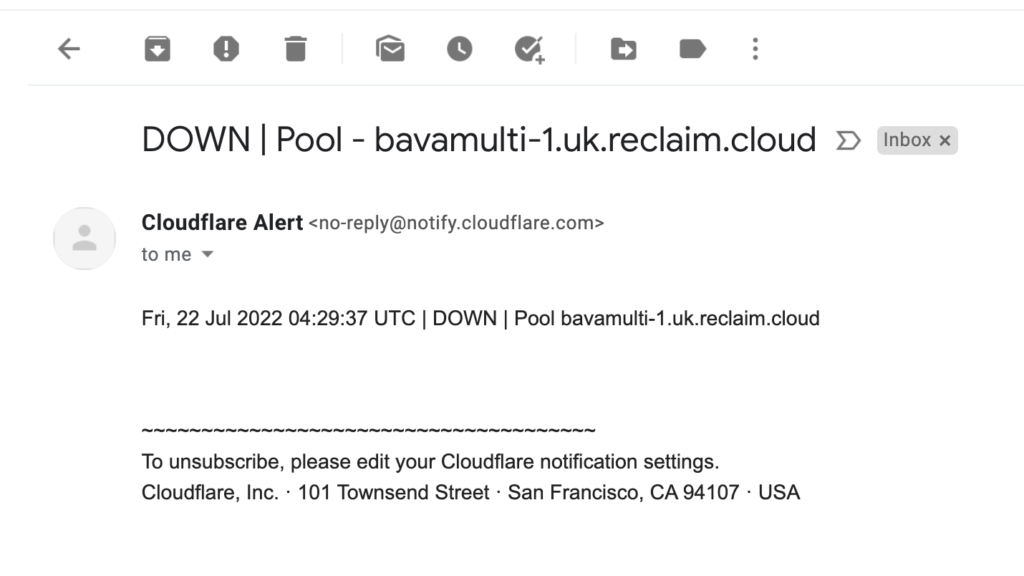

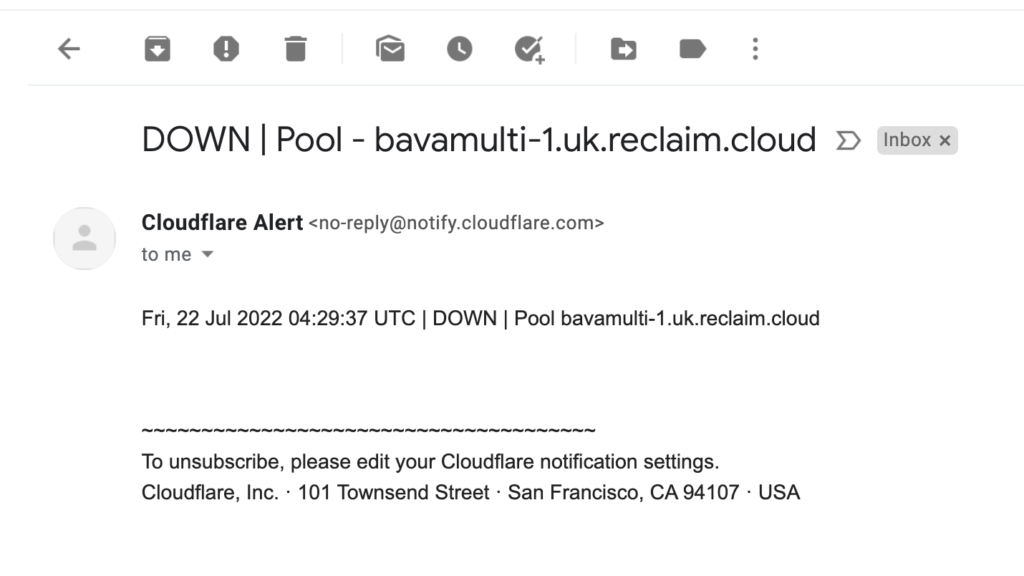

I can also confirm the email monitoring works:

Example of a monitoring email from Cloudflare notifying me of issues with a server

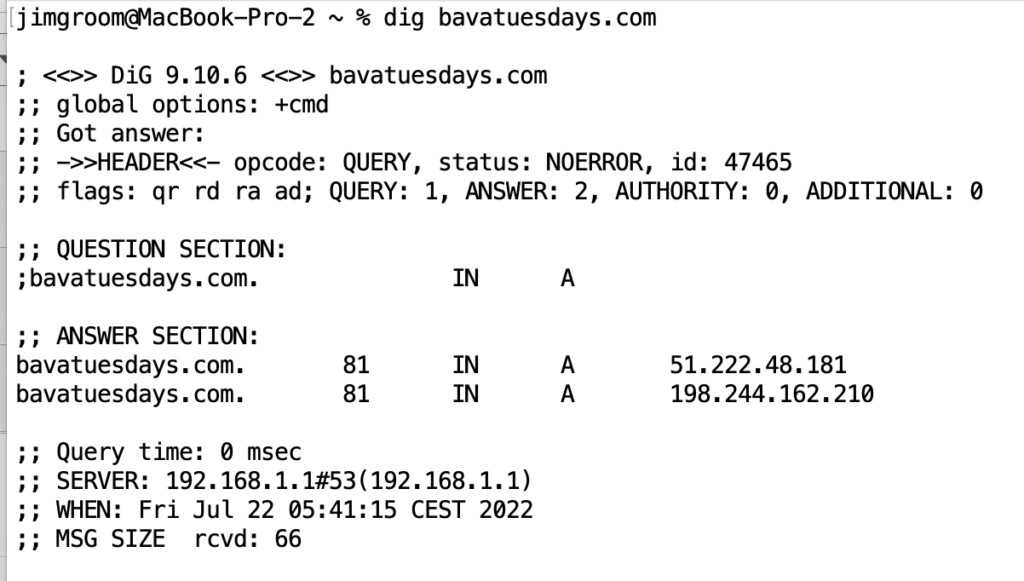

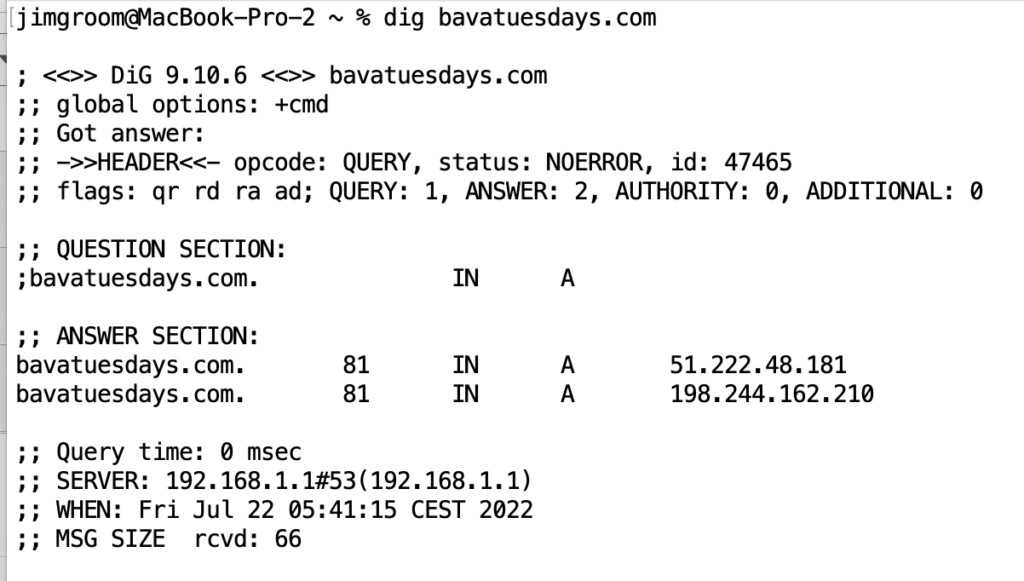

And I did a test to ensure when both servers are online both IP addresses show up:

dig bavatuesdays.com: notice that when all servers are healthy, all IP addresses are listed

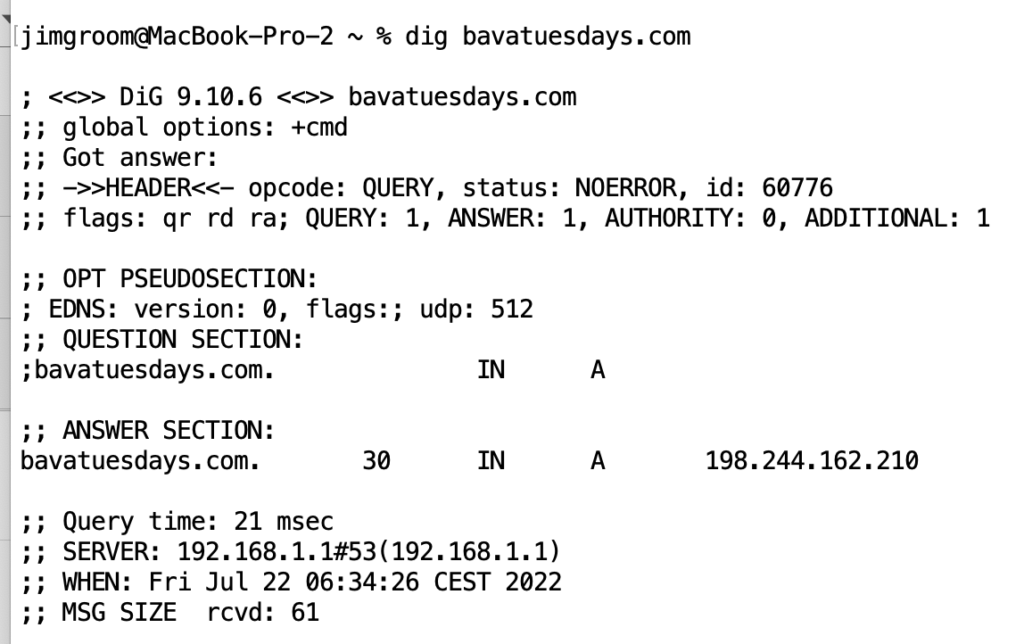

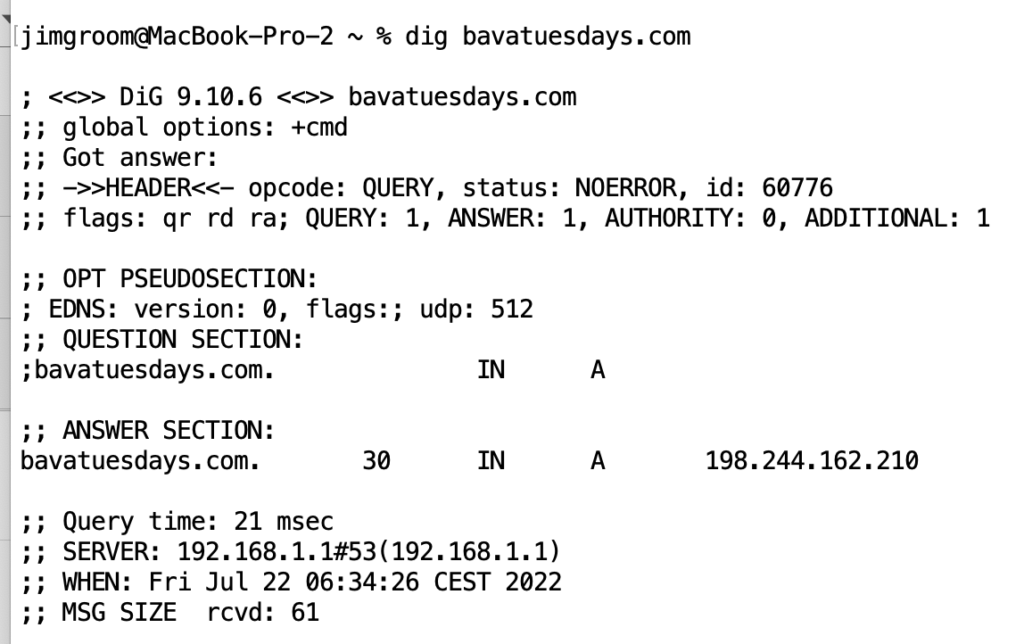

And below is a test when one server goes down, in this case the Canadian server. Only the UK server IP address shows as available, which means the load balancing and failover are working perfectly:

dig bavatuesdays.com when one of two servers is down, notice just one IP address shows, the one that works!

That is awesome. I have to say that Cloudflare is quickly becoming my new favorite tool to play with all this stuff. And Cloudflare in conjunction with the standalone WordPress Multi-Region is a powerful demonstration of how much Cloudflare can do with DNS to help you abstract your server setup to manage failover and multi-region load balancing seamlessly.
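The dig checks above can be scripted so each expected IP is reported as present or missing. This is a rough sketch that assumes the load-balanced hostname is DNS-only (a proxied hostname would return Cloudflare's own addresses instead of the servers'); `live_ips`, `report_origins`, and the `DIG_BIN` override are hypothetical names, and the IPs in the usage comment are placeholders.

```shell
#!/bin/sh
# Print the A records currently returned for a domain, one per line.
live_ips() {
  "${DIG_BIN:-dig}" +short "$1" A
}

# Report whether each expected server IP is currently being handed out.
# usage: report_origins DOMAIN IP [IP ...]
#   report_origins bavatuesdays.com 203.0.113.10 198.51.100.20
report_origins() {
  domain="$1"; shift
  live=$(live_ips "$domain")
  for ip in "$@"; do
    if printf '%s\n' "$live" | grep -qxF "$ip"; then
      echo "$ip in rotation"
    else
      echo "$ip withdrawn"
    fi
  done
}
```

With both servers healthy, every IP should be reported as in rotation; with one server down, its address should be reported as withdrawn once the monitor has marked the pool unhealthy.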

The final thing I’m playing with on this setup is using Traffic Steering in Cloudflare, which allows me to locate a server by a rough latitude and longitude so that Cloudflare can calculate how close a visitor is to which IP and steer them towards the closer server. In this way, it is essentially geo-locating visitor traffic to the closest server, which is pretty awesome, although I am not sure how to test it just yet.

So, by all indicators the third time may very well be a charm, and simple is better! But the question remains whether this post will populate across both servers when published with one down, and whether comments will also sync once the failed server comes back online. I’ll report more about this in the comments below….
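Roughly speaking, steering by proximity comes down to a distance calculation between the visitor and each pool's coordinates. The sketch below illustrates the idea with the haversine formula. It is not Cloudflare's implementation, the function names are made up, and the coordinates (London and Toronto as stand-ins for the UK and Canadian data centers) are assumptions.

```shell
#!/bin/sh
# Great-circle distance in km between two lat/long points (haversine formula).
# usage: distance_km LAT1 LON1 LAT2 LON2
distance_km() {
  awk -v lat1="$1" -v lon1="$2" -v lat2="$3" -v lon2="$4" 'BEGIN {
    pi = atan2(0, -1); r = 6371            # r: mean Earth radius in km
    dlat = (lat2 - lat1) * pi / 180
    dlon = (lon2 - lon1) * pi / 180
    a = sin(dlat / 2)^2 + cos(lat1 * pi / 180) * cos(lat2 * pi / 180) * sin(dlon / 2)^2
    printf "%.0f\n", 2 * r * atan2(sqrt(a), sqrt(1 - a))
  }'
}

# Print the name of the pool closest to the visitor.
# usage: nearest_pool VISITOR_LAT VISITOR_LON name:lat:lon [name:lat:lon ...]
nearest_pool() {
  vlat="$1"; vlon="$2"; shift 2
  best=""; best_d=""
  for pool in "$@"; do
    name=${pool%%:*}; rest=${pool#*:}
    plat=${rest%%:*}; plon=${rest#*:}
    d=$(distance_km "$vlat" "$vlon" "$plat" "$plon")
    if [ -z "$best_d" ] || [ "$d" -lt "$best_d" ]; then
      best="$name"; best_d="$d"
    fi
  done
  echo "$best"
}

# Hypothetical pools: uk near London, ca near Toronto
# nearest_pool 48.86 2.35 uk:51.5:-0.13 ca:43.65:-79.38    # visitor near Paris
```

Feeding in a few visitor coordinates is a cheap way to sanity-check which pool a given location ought to land on before comparing that with what Cloudflare actually does.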

