These days I’ve been in a Mastodon maintenance state of mind, as my last post highlights. Earlier this week I tried to shore up some security issues linked with listing files publicly in our AWS S3 bucket. This encouraged me to explore additional options for object storage (the fancy name for cloud-based file storage), and I decided to try to move all the files for social.ds106.us from the AWS bucket to DigitalOcean’s Spaces, which is their S3-compatible cloud storage offering.

“Why are you doing this?”

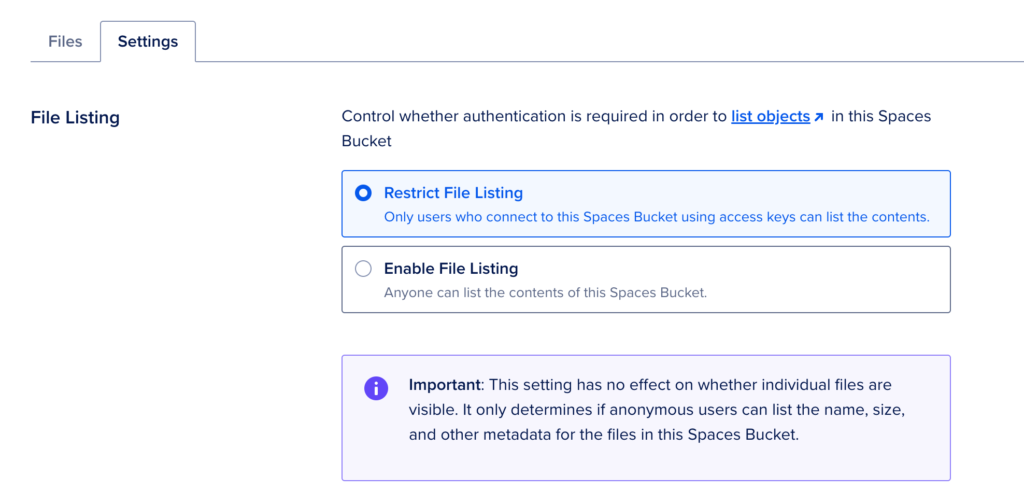

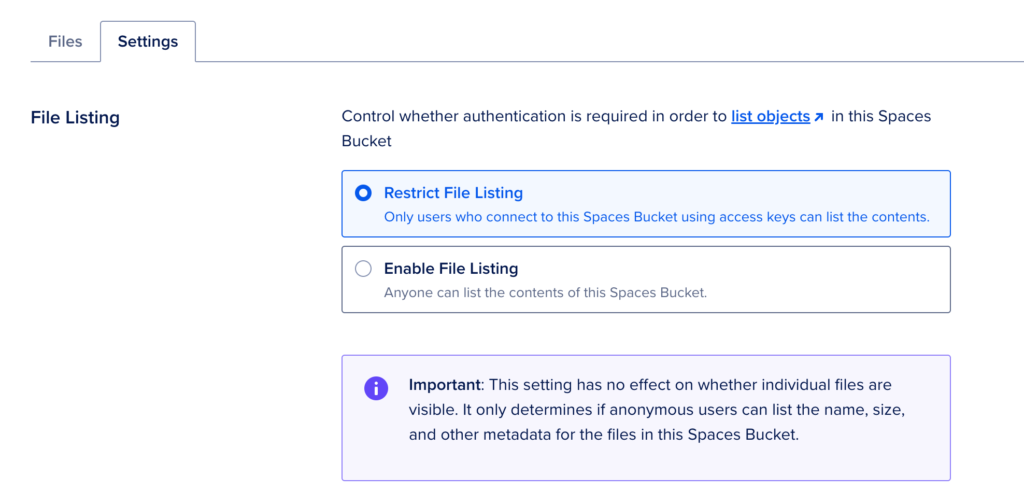

Why am I doing this? Firstly, to see if I could, and secondly because AWS is unnecessarily difficult. What annoys me about AWS is that the interface remains a nightmare, and a simple permissions policy like preventing the listing of files in a bucket becomes overly complex, which then results in potential security risks. I eventually figured out how to restrict file listing on AWS, but seeing how easy it was with my other Mastodon instance using Spaces (literally a check box), I decided to jump.

DigitalOcean’s Spaces provides an option for disabling File Listing

To be clear, I didn’t take this lightly. The ds106 Mastodon server has over 30 active users, and I’m loving that small, tight community. In fact, I’m loving the work of administering Mastodon more generally; it transports me back to the joyful process of discovery when figuring out how to run WordPress Multiuser.

“Back when I had hair”

Anyway, moving people’s stuff means you can also lose their stuff, and that is not fun. So I did take a snapshot of the server before embarking on this expedition, but the service does not stop with a snapshot. Folks keep posting and the federated feed keeps pulling in new posts, so that was something I had to keep in mind along the way.

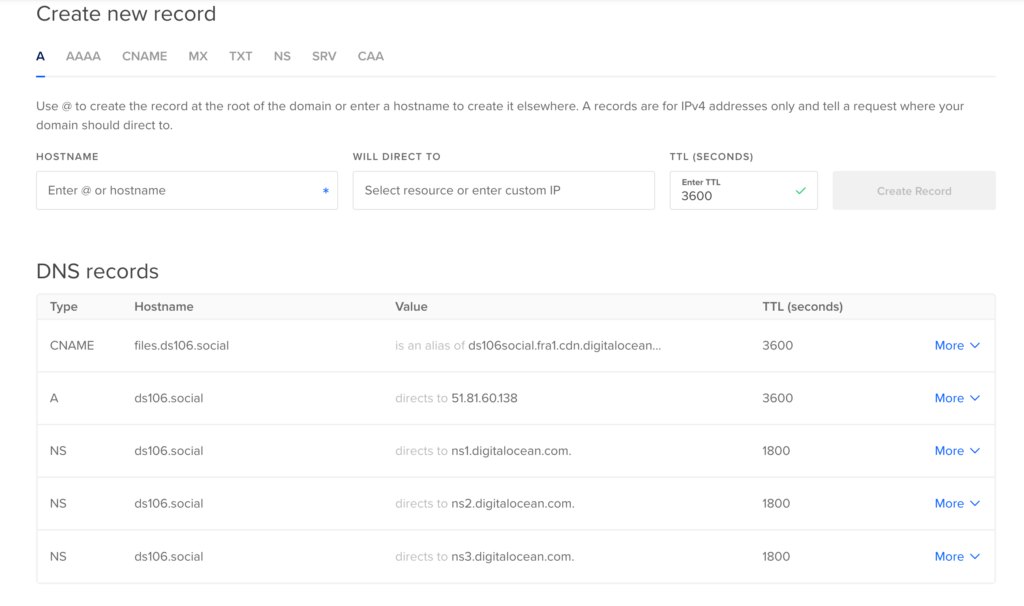

So, in order to get started I had to set up a Spaces bucket on DigitalOcean where I would move all the files from the AWS S3 bucket. Doing this is really quite simple, and you can follow DigitalOcean’s guide for “How to Create Spaces Bucket.” Keep in mind you want to restrict file listing in the settings for this bucket.

After that, we need to move the files over. After reading another DigitalOcean guide, “How to Migrate from Amazon S3 to DigitalOcean Spaces with rclone,” the best tool for the job seems to be the rclone utility, but that guide is from 2017, so I would love to hear if anyone has something else they prefer. You download rclone locally and set up the configuration file with the details for both AWS and Spaces.

My config file looked something like what follows, but if you’re doing this for Mastodon it is highly recommended that you make the acl (access control list) public (the S3 canned ACL name for this is public-read), not private as the rclone guide linked previously suggests. Leaving this setting as private led to a huge headache for me because I was not reading the fine print. With over 400,000 objects moved to Spaces, all of them private, I had to do another pass using the s3cmd utility to make them all public. That took many hours and added an unnecessary step, given these files are designed to be public.

Anyway, here is a template for your rclone.conf file; keep in mind you’ll need your own access keys for each service.

[s3]

type = s3

env_auth = false

access_key_id = yourawsbucketaccesskey

secret_access_key = yourawsbucketsecretaccesskey

region = us-east-1

location_constraint = us-east-1

acl = public-read

[spaces]

type = s3

env_auth = false

access_key_id = yourspacesaccesskey

secret_access_key = yourspacessecretaccesskey

endpoint = fra1.digitaloceanspaces.com

acl = public-read
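Since the acl line is the one that bit me, it may be worth reading it back out of the config before starting a long transfer. What follows is only a rough sketch: `remote_acl` is a hypothetical helper name, not part of rclone, and it assumes the plain INI layout of the template above. Running `rclone config file` should tell you where your rclone.conf actually lives.

```shell
#!/bin/sh
# Hypothetical helper: print the acl value configured for one remote.
# Usage: remote_acl /path/to/rclone.conf spaces
remote_acl() {
  awk -v want="$2" '
    # A [section] header names the remote we are currently inside.
    /^\[.*\]$/ { name = substr($0, 2, length($0) - 2); next }
    # Inside the wanted remote, print the text after "acl =" and stop.
    name == want && /^[ \t]*acl[ \t]*=/ {
      sub(/^[^=]*=[ \t]*/, ""); sub(/[ \t]+$/, ""); print; exit
    }
  ' "$1"
}
```

For a Mastodon media bucket, the destination remote should report public-read; if it prints private, or nothing at all, fix the config before syncing.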

After that’s set up correctly, you can run the following command to clone all files in the specified AWS S3 bucket over to the specified DigitalOcean Spaces bucket:

rclone sync s3:reclaimsocialdev spaces:ds106social
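With this many objects it can be reassuring to preview the transfer first and compare both sides afterwards. Here is a minimal sketch using rclone's `--dry-run` and `--progress` flags and its `check` command; the two function names are my own, and the remote and bucket names are simply the ones from the command above.

```shell
#!/bin/sh
SRC="s3:reclaimsocialdev"   # source remote:bucket from the command above
DST="spaces:ds106social"    # destination remote:bucket from the command above

# Show what a sync would copy or delete, without changing anything.
preview_sync() {
  rclone sync --dry-run "$SRC" "$DST"
}

# Run the real sync with live progress, then compare source and destination.
sync_and_verify() {
  rclone sync --progress "$SRC" "$DST" && rclone check "$SRC" "$DST"
}
```

Calling `preview_sync` first and `sync_and_verify` once the preview looks sane is the idea. With hundreds of thousands of objects the check pass will itself take a while.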

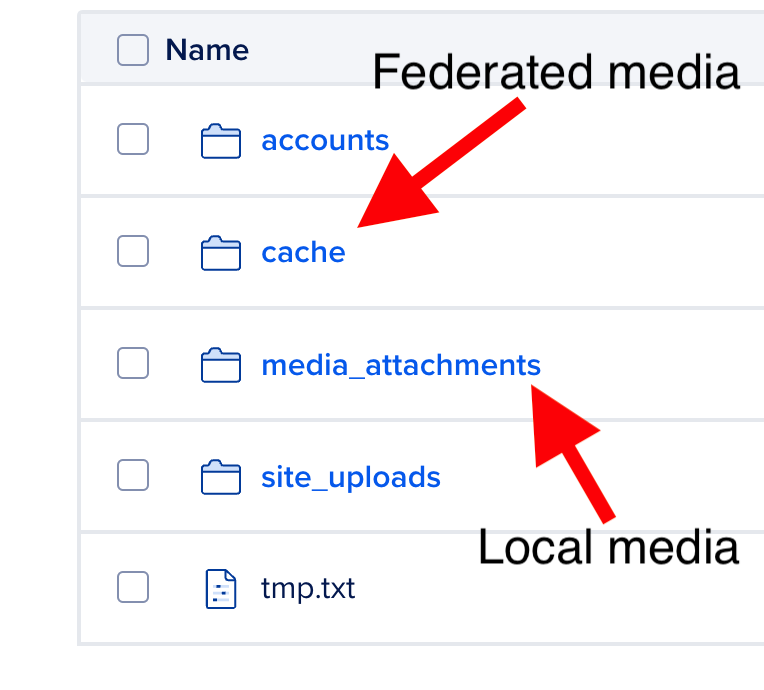

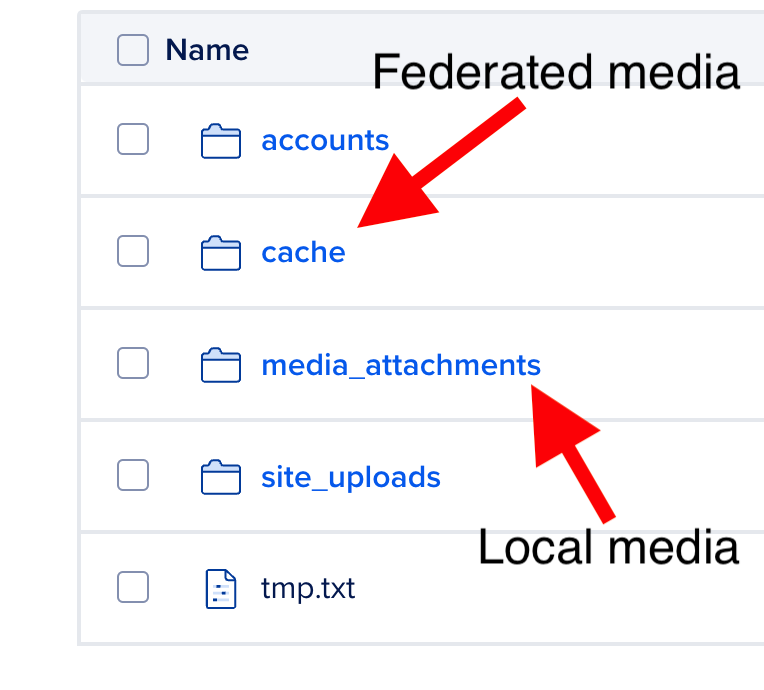

Given the sheer number of files, this took hours. One of the things I learned when doing this is that most of those files are in the cache folder, given that is where all the federated posts are stored, and this is what can quickly get your Mastodon instance storage out of control. In fact, our local user files are all stored in media_attachments:

Mastodon Bucket file storage directories

The cache folder is by far the largest for the ds106 server, and knowing this allowed me to do a final clone of every other directory before switching to Spaces to make sure everything was up to date. Some of the federated media may be lost in the transition given the sheer volume, and that proved to be true for about an hour’s worth of media on this server.* Luckily it is still all on the original source, and this only affected images and thumbnails, but I do want to figure out if there is a way of re-pulling in specific federated feeds. I tried running the command for accounts refresh:

RAILS_ENV=production /home/mastodon/live/bin/tootctl accounts refresh [email protected]

As well as rebuilding all feeds, but that didn’t help either:

RAILS_ENV=production /home/mastodon/live/bin/tootctl feeds build --all
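One thing I have not tried on this server, so take it as a hedged suggestion: tootctl also has a `media refresh` subcommand that refetches remote media attachments, scoped by `--account`, `--domain`, or `--status`. The sketch below wraps it in two functions whose names are my own; the tootctl path is the one used in the commands above, and whether `--force` is also needed for attachments the database already knows about is something to confirm with `tootctl media refresh --help`.

```shell
#!/bin/sh
TOOTCTL="/home/mastodon/live/bin/tootctl"   # path used in the commands above

# Re-download remote media for one account, given as user@domain.
refresh_account_media() {
  RAILS_ENV=production "$TOOTCTL" media refresh --account "$1"
}

# Re-download remote media for every known account on one domain.
refresh_domain_media() {
  RAILS_ENV=production "$TOOTCTL" media refresh --domain "$1"
}
```

These would be run as the mastodon user, passing the account handle or domain whose images went missing.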

That said, it was not too big an issue given just a few images were lost in the transition from federated posts, so I was willing to swallow that loss and move on. After the cloning was finished and I made the switch I realized all the media on Spaces was private given that errant acl setting referenced above. I was chasing my tail for a bit until I figured that issue out. I had to use the s3cmd tool for the Spaces bucket to update the permissions for each directory. Rather than trying to change permissions for all 400,000 files at once, which would take too long, I just updated permissions for every directory but cache (which has the lion’s share of files on this server) and this was done in under an hour. Here are the s3cmd commands I used, and here is a useful guide for getting the s3cmd utility installed on your machine.

s3cmd setacl s3://ds106social/accounts --acl-public --recursive

s3cmd setacl s3://ds106social/media_attachments --acl-public --recursive

s3cmd setacl s3://ds106social/site_uploads --acl-public --recursive

Once these were done, I then went into each sub-directory of the cache folder and did the same for them, which with 400,000 files took forever.

s3cmd setacl s3://ds106social/cache/preview_cards --acl-public --recursive

s3cmd setacl s3://ds106social/cache/custom_emojis --acl-public --recursive

s3cmd setacl s3://ds106social/cache/accounts/avatars --acl-public --recursive

s3cmd setacl s3://ds106social/cache/accounts/headers --acl-public --recursive
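The same commands can be wrapped in a small loop so the prefixes run one after another in the order you choose. This is just a sketch: `make_public` is a hypothetical function name, and it assumes s3cmd is already configured to talk to your Spaces endpoint.

```shell
#!/bin/sh
BUCKET="s3://ds106social"   # bucket from the commands above

# Make every object under each given prefix publicly readable,
# one prefix at a time, stopping at the first failure.
make_public() {
  for prefix in "$@"; do
    s3cmd setacl "$BUCKET/$prefix" --acl-public --recursive || return 1
  done
}
```

For example, `make_public accounts media_attachments site_uploads` handles the small directories first, and a second call with the cache sub-directories can be left running in the background.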

Once I updated all the file permissions for every directory except cache, I could switch to Spaces and let the permissions update for the cache directory run in the background for several hours. That did mean that media for older federated posts was not resolving on the ds106 instance in the interim, which was not ideal, but at the same time nothing was lost and that issue would be resolved. Moreover, all newly federated post media coming in after the switch was resolving cleanly, so there was at least a patina of normalcy.

In terms of switching, you update the .env.production file in /home/mastodon/live with the new credentials, so after updating the file my Spaces details look like the following:

S3_ENABLED=true

S3_PROTOCOL=https

S3_BUCKET=ds106social

S3_REGION=fra1

S3_HOSTNAME=fra1.digitaloceanspaces.com

S3_ENDPOINT=https://fra1.digitaloceanspaces.com

AWS_ACCESS_KEY_ID=yourspacesaccesskey

AWS_SECRET_ACCESS_KEY=yourspacessecretaccesskey
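A typo in this file is easy to miss, so a quick check that every one of these keys is present and non-empty can save a confusing restart. The helper below is a hypothetical sketch (the name `missing_s3_keys` is mine), and it only checks the keys listed above, not their values.

```shell
#!/bin/sh
# Print each expected key that is missing or empty in the given env file.
# Prints nothing when all of them are set.
# Usage: missing_s3_keys /home/mastodon/live/.env.production
missing_s3_keys() {
  for key in S3_ENABLED S3_PROTOCOL S3_BUCKET S3_REGION S3_HOSTNAME S3_ENDPOINT \
             AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY; do
    grep -Eq "^${key}=.+" "$1" || echo "$key"
  done
}
```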

After you make these changes and save the file you can restart the web service:

systemctl restart mastodon-web
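After the restart, one way to confirm that media really is publicly readable is to request an object and look at the HTTP status code. A small sketch with curl follows; `http_status` is a hypothetical name, and you would pass it the URL of any object you know exists in the bucket.

```shell
#!/bin/sh
# Print only the HTTP status code returned for a URL.
# Usage: http_status https://ds106social.fra1.digitaloceanspaces.com/path/to/an/object
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}
```

A 200 means the object is being served publicly; a 403 is what I would expect to see for objects still carrying the private acl.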

You’ll notice the region is now in Frankfurt, Germany for the ds106 Spaces bucket versus US-East in AWS; this makes it a bit more GDPR compliant given the ds106 Mastodon server is in Canada. And with that, if you don’t make the same permissions mistakes as me, your media will now be on Spaces, which means you have simplified your Mastodon sysadmin life tremendously.

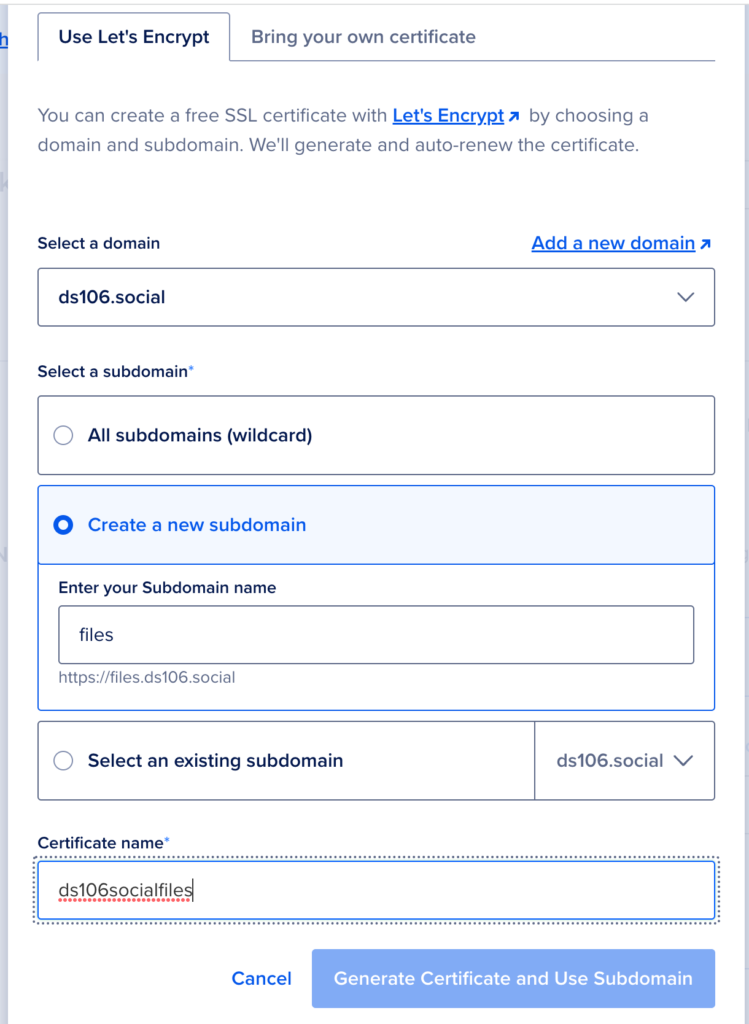

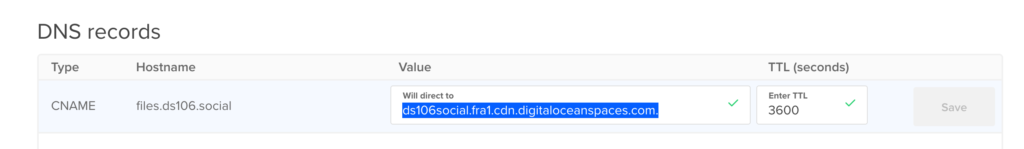

With that done and the files being successfully served from Spaces, I started to get cocky. So, I decided to load all the files over a custom domain like https://ds106.social versus https://ds106social.fra1.digitaloceanspaces.com, but I’ll save that story for my next post….

Update: For posterity, it might be good to record that this was the StackExchange post that helped me figure out the permissions issues with the rclone transfer making all files private.

____________________________________________________

*It seems, though I’m not certain, that when trying to clone specific directories within the cached directory of media that were not synced overnight, the re-cloning of specific directories removed the existing directories/files in the cloned directory. I was hoping it would act more like rsync in that regard, but I’m not sure it does, which may also be a limitation of my knowledge here.
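A possible explanation, offered tentatively: `rclone sync` makes the destination match the source and deletes anything at the destination path that is not in the source, so pointing it at one sub-directory on the source and a broader path on the destination can remove files. `rclone copy` only adds or updates files and never deletes. Below is a sketch of re-copying a single prefix that way; `copy_prefix` is a hypothetical name, and the remotes are the ones used earlier in this post.

```shell
#!/bin/sh
SRC="s3:reclaimsocialdev"   # source remote:bucket used earlier
DST="spaces:ds106social"    # destination remote:bucket used earlier

# Copy one prefix to the same prefix on the destination,
# leaving everything already there untouched.
copy_prefix() {
  rclone copy --progress "$SRC/$1" "$DST/$1"
}
```

For example, `copy_prefix cache/preview_cards` would top up just that directory. Note that the same prefix appears on both sides of the command, which is the part that is easy to get wrong by hand.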

But despite all the scheming and posturing of companies large and small vying for student data, an open standard around containerization for the web was congealing and the vision of a cloud-based space for students to explore various applications was on the horizon. Docker was a huge piece of this shift. I talked a bit about the shipping metaphor at more length, this time using examples from Marc Levinson’s book The Box: How the Shipping Container Made the World Smaller and the World Economy Bigger.
